Why Clean Data in Power BI, and the Essential Steps Not to Miss

Imagine steering a ship with a faulty compass: that is what making decisions on unrefined data is like. Inaccurate or inconsistent data can lead to misguided strategies, missed opportunities, and financial losses. Gartner has estimated that poor data quality costs organizations an average of $12.9 million a year.

Power BI’s robust features, like Power Query, empower you to prepare and modify data before analysis. By removing duplicates, correcting formatting issues, and resolving inconsistencies, you can trust that your data reflects reality.

A common misstep when organizations deploy Power BI for the first time is that users go straight to building visuals and skip data preparation, the step that makes their reports accurate and reliable. This oversight can lead to misleading insights and flawed business decisions.

In this blog, we’ll explore the importance of comprehensive data preparation in Power BI and highlight a commonly missed step that could be the key to unlocking more precise and actionable insights.

Risks of Poor Data Quality in Power BI

High-quality, structured data is the foundation of reliable business intelligence. Poor data quality – whether due to inaccurate values, inconsistencies from different sources, or outdated information – can severely undermine the value of Power BI analyses. The old adage “garbage in, garbage out” applies: if you feed Power BI with bad data, it will produce misleading reports that lead to flawed decisions.

Below we outline key risks of poor data quality and how Microsoft recommends mitigating them, with a focus on business intelligence dashboards and data-driven decision making.

Misleading Reports from Inaccurate Data

Inaccurate or incomplete data in Power BI can directly result in misleading reports. When figures are wrong or definitions inconsistent, the visuals and KPIs on business intelligence dashboards no longer reflect reality. Microsoft warns that “out-of-date reports cause misinterpreted insights” – in other words, decisions based on stale or erroneous data can send the business in the wrong direction.

For example, before Microsoft’s own teams unified their data, different departments produced separate reports with conflicting numbers, leading to contradictory conclusions. In one case, each team calculated market share differently, which “led to some apparent contradictions” in meetings. By consolidating these disparate sources into one Power BI dashboard (a single source of truth), Microsoft achieved alignment and eliminated confusion. This highlights the impact of poor data quality: when data isn’t clean or consistent, even well-designed Power BI visuals can deceive. Ensuring clean data in Power BI is therefore not just a technical exercise – it’s essential for trustworthy insights.
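To see how two teams can report conflicting market share from the same data, consider the small sketch below. It is plain Python used only to illustrate the arithmetic; all figures are hypothetical, and the two definitions (share by units and share by revenue) are assumptions chosen for the example, not the definitions Microsoft's teams actually used.

```python
# Hypothetical figures, invented purely for illustration.
our_units = 1_200
our_revenue = 90_000.0
market_units = 10_000
market_revenue = 600_000.0


def share(part: float, whole: float) -> float:
    """Return part / whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Team A defines market share by units sold.
unit_share = share(our_units, market_units)

# Team B defines market share by revenue.
revenue_share = share(our_revenue, market_revenue)

print(f"Unit share:    {unit_share}%")
print(f"Revenue share: {revenue_share}%")
```

Both numbers are correct under their own definition, yet they differ by three percentage points. Agreeing on one definition and publishing it in a shared model is what removes the apparent contradiction.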

To maintain accuracy, it’s critical to verify data at every stage. Microsoft’s guidance emphasizes validating data early in the process, not waiting until after reports are generated. “Validate early,” the Cloud Adoption Framework advises – catch errors at ingestion or during transformation, before they proliferate into dashboards.

In practice, this means using Power BI’s data transformation tools (like Power Query or dataflows) to apply data quality checks and correct issues before building reports. Taking these proactive steps helps avoid the scenario of faulty decision-making from bad data, preserving the integrity of your insights.

Financial and Operational Consequences

The financial and operational consequences of poor data quality can be significant. When data is wrong, businesses often incur extra costs and effort to reconcile or integrate it. Microsoft notes that “inconsistent data could lead to high integration costs” – teams spend time and money manually fixing data issues or merging inconsistent datasets. There’s also an opportunity cost: resources get diverted to firefighting data problems instead of driving innovation. Inaccurate data can trickle down into planning errors, inventory mistakes, or misguided marketing spends, each carrying a price tag.

Operationally, bad data can sabotage advanced initiatives. For instance, “poor data quality sabotages new AI models” according to Microsoft’s Power BI team. Analytics and machine learning rely on large volumes of clean, structured data; if that data is riddled with errors, predictive models yield skewed results or even fail. This not only wastes the investment in AI projects but also delays insights that could have improved efficiency or revenue. Microsoft cautions that all these issues “stifle the ability to unlock the full value of data, hampering innovation”.

The Role of Data Engineering in Ensuring Data Quality

While many users focus on Power BI’s built-in tools for data cleaning and transformation, they often overlook the critical role of data engineering in this process. Data engineering involves designing and managing the infrastructure that extracts, transforms, and loads (ETL) data, so that the information is accurate, consistent, and accessible before it even reaches Power BI.

Data Integration and Consolidation

Data engineers are responsible for integrating data from various sources—such as databases, cloud platforms, and third-party APIs—into a unified system. This consolidation provides a comprehensive view of the organization’s data, enabling more insightful analysis and reporting in Power BI. By creating robust data pipelines, engineers ensure that data is consistently and accurately collected, reducing the risk of discrepancies and errors.

Data Cleaning and Transformation

Before data reaches Power BI, it must be cleaned and transformed to ensure suitability for analysis. Data engineers employ various tools and techniques to remove duplicates, handle missing values, and standardize data formats. Power Query, for instance, is a valuable feature within Power BI that allows for extensive data transformation and cleaning, enabling users to prepare their data effectively for analysis.
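The three cleaning steps named above (removing duplicates, handling missing values, and standardizing formats) can be sketched in a few lines. This is a minimal illustration in plain Python rather than Power Query, and the column names, sample rows, and the day-first date format are all assumptions made for the example.

```python
from datetime import datetime

# Hypothetical raw rows, as they might arrive from two source systems.
raw_rows = [
    {"order_id": "A-1", "order_date": "2024-03-05", "region": " north ", "amount": "120.50"},
    {"order_id": "A-1", "order_date": "2024-03-05", "region": "North", "amount": "120.50"},
    {"order_id": "A-2", "order_date": "05/03/2024", "region": "SOUTH", "amount": ""},
    {"order_id": "A-3", "order_date": "2024-03-07", "region": None, "amount": "80"},
]

# Assumed input formats; treating the second one as day-first is an assumption.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Try each known format and return an ISO date string, or None."""
    if not text:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(rows):
    """Deduplicate on order_id, standardize formats, and mark missing values."""
    seen = set()
    result = []
    for row in rows:
        if row["order_id"] in seen:  # drop repeats of the same business key
            continue
        seen.add(row["order_id"])
        result.append({
            "order_id": row["order_id"],
            "order_date": parse_date(row["order_date"]),
            # Missing regions get an explicit label instead of a blank.
            "region": (row["region"] or "Unknown").strip().title(),
            # Missing amounts stay None so they are not mistaken for zero.
            "amount": float(row["amount"]) if row["amount"] else None,
        })
    return result


cleaned = clean(raw_rows)
for row in cleaned:
    print(row)
```

Keeping a missing amount as `None` rather than filling it with zero is a deliberate choice in this sketch: a zero would silently change totals, while an explicit gap can be reviewed or imputed later.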

Establishing Data Governance and Quality Standards

Implementing data governance frameworks is another critical responsibility of data engineers. By setting data quality standards and monitoring compliance, they ensure that the data used in Power BI analyses is both reliable and secure. This involves creating documentation, maintaining data catalogs, and establishing protocols for data access and usage, all of which contribute to the overall trustworthiness of the data.

Collaboration with Analysts and Stakeholders

Data engineers work closely with data analysts and other stakeholders to understand their data needs and challenges. This collaboration ensures that the data infrastructure aligns with business objectives and that the data provided supports effective decision-making. By facilitating clear communication between technical and non-technical teams, data engineers help bridge the gap, ensuring that data solutions are both technically sound and business relevant.

In summary, data engineering serves as the backbone of high-quality data management, directly influencing the effectiveness of Power BI reports and dashboards. By investing in robust data engineering practices, organizations can enhance their data quality, leading to more accurate insights and informed decision-making.

Microsoft’s Best Practices for Data Quality

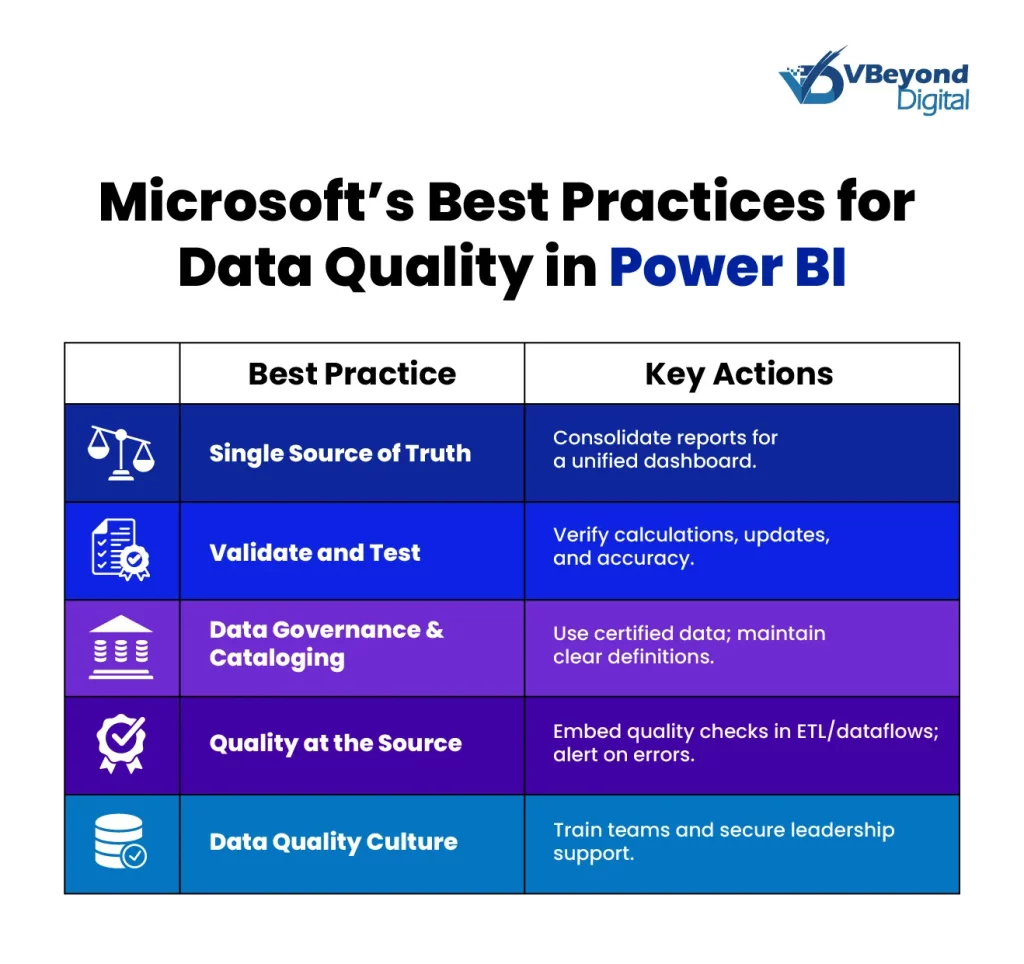

Microsoft has documented several best practices to prevent data quality issues and preserve trust in Power BI content. These practices, drawn from Microsoft’s own implementations and guidance, include:

- Establish a Single Source of Truth: Eliminate conflicting reports by consolidating data. Microsoft’s own experience, described earlier, shows that moving from siloed, duplicate reports to one central Power BI dashboard, “creating a single source of truth”, significantly improves alignment. Executives and teams should be looking at the same structured data rather than multiple versions of the truth.

- Validate and Test Content Thoroughly: Before deploying a dashboard or report, perform rigorous validation. “Validating content is critical to ensure the quality and trustworthiness” of BI solutions, Microsoft advises. This means checking that calculations match expected results and that data updates correctly. Microsoft’s Power BI implementation guides suggest creators should test their data accuracy against known baselines. By catching errors early, you prevent end users (especially executives) from seeing mistakes that would reduce their trust.

- Implement Data Governance and Cataloging: Good data governance helps maintain quality. Microsoft recommends encouraging the use of trusted, high-quality data sources and maintaining a data catalog. By endorsing certified datasets and documenting data definitions, organizations ensure everyone uses consistent data in their Power BI reports. This reduces inadvertent errors and builds user confidence that the data is vetted. Tools like Microsoft Purview (formerly Azure Purview, Microsoft’s data governance service) integrate with Power BI to help achieve this. In fact, the integration of Power BI with Purview enables users to easily find trustworthy data and trace lineage, reinforcing confidence in where the data comes from.

- Address Data Quality at the Source: Microsoft’s Cloud Adoption Framework suggests embedding quality checks as data is ingested or prepared. For example, define global data quality rules (completeness, validity, consistency) and apply them in ETL or Power BI dataflows. If data fails these rules – say, a batch contains missing values or out-of-range metrics – stop the load and alert data owners. This proactive approach prevents bad data from ever reaching your dashboards. Microsoft even provides solutions like Microsoft Purview Data Quality, which can automatically assess and score datasets, sending alerts for anomalies. This service is “crucial for reliable AI-driven insights and decision-making”, underlining that quality data is a prerequisite for trustworthy analysis.

- Foster a Data Quality Culture: Finally, Microsoft emphasizes the human aspect – training and processes. Encourage a culture where analysts and report creators take responsibility for data correctness. The Power BI data cleaning guide (for instance, Microsoft’s Power Query training) notes that professionals spend countless hours ensuring data is clean, and that modern tools can automate much of this work. As Microsoft’s guidance on data culture suggests, executive support for governance and data quality initiatives is key to success. When leaders actively champion data quality, it reinforces its importance across the organization.
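The "address data quality at the source" practice in the list above can be sketched as a small quality gate that runs before a load. This is an illustrative outline in plain Python, not a Microsoft API: the rule thresholds, the field names (`order_id`, `net`, `tax`, `gross`), and the `load_if_clean` helper are all hypothetical.

```python
class DataQualityError(Exception):
    """Raised when a batch fails a quality rule and the load should stop."""


# Hypothetical rules; real thresholds would come from your data owners.
REQUIRED_FIELDS = ("order_id", "gross")
MAX_MISSING_RATIO = 0.05           # completeness threshold
GROSS_RANGE = (0.0, 1_000_000.0)   # validity range
TOLERANCE = 0.01                   # consistency: net + tax should equal gross


def check_batch(rows):
    """Return a list of rule violations for the batch (empty if it passes)."""
    if not rows:
        return ["completeness: batch is empty"]
    problems = []
    for field in REQUIRED_FIELDS:
        missing = sum(1 for r in rows if r.get(field) in (None, ""))
        ratio = missing / len(rows)
        if ratio > MAX_MISSING_RATIO:
            problems.append(f"completeness: {field} missing in {ratio:.0%} of rows")
    low, high = GROSS_RANGE
    invalid = sum(
        1 for r in rows
        if r.get("gross") is not None and not low <= r["gross"] <= high
    )
    if invalid:
        problems.append(f"validity: {invalid} gross value(s) out of range")
    inconsistent = 0
    for r in rows:
        net, tax, gross = r.get("net"), r.get("tax"), r.get("gross")
        if None not in (net, tax, gross) and abs(net + tax - gross) > TOLERANCE:
            inconsistent += 1
    if inconsistent:
        problems.append(f"consistency: {inconsistent} row(s) where net + tax != gross")
    return problems


def load_if_clean(rows, alert=print):
    """Alert on every violation and stop the load; otherwise pass rows through."""
    problems = check_batch(rows)
    if problems:
        for problem in problems:
            alert(f"ALERT for data owner: {problem}")
        raise DataQualityError("; ".join(problems))
    return rows


good_batch = [{"order_id": "A-1", "net": 100.0, "tax": 20.0, "gross": 120.0}]
bad_batch = [
    {"order_id": "B-1", "net": 100.0, "tax": 20.0, "gross": -5.0},
    {"order_id": None, "net": 50.0, "tax": 10.0, "gross": 60.0},
]

load_if_clean(good_batch)
try:
    load_if_clean(bad_batch)
except DataQualityError as err:
    print("Load stopped:", err)
```

Raising an exception, rather than logging and continuing, is what keeps a failing batch from reaching the dashboards. The `alert` parameter is a stand-in for whatever notification channel a team actually uses.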

Power BI’s Data Cleaning and Validation Capabilities

One of the reasons Power BI is widely used for enterprise analytics is its strong set of built-in tools for data cleaning and validation. These capabilities help mitigate the risks of poor data quality by catching issues early and standardizing data from disparate systems:

- Power Query for Data Transformation: Power BI comes with Power Query, a powerful data connection and transformation engine. It allows users to connect to data from different sources (databases, files, cloud services) and then clean and shape that data through an intuitive interface. Microsoft describes Power Query as a tool that transforms and automates data preparation, “giving you time for analysis and for driving business impact” instead of manual cleanup. With Power Query, analysts can remove duplicates, handle missing values, split or merge columns, enforce data types, and apply business rules – all steps that ensure you have clean data in Power BI models. This is essentially a data cleaning guide built into the tool: users can apply a rich library of transformations without coding, and even less-technical business users can make sure their data is analysis-ready.

- Dataflows and Data Integration: For more complex or enterprise-scale data preparation, Power BI dataflows provide a code-free ETL solution in the cloud. Dataflows let teams create reusable data transformation pipelines that ingest data from various sources and output standardized, cleaned tables. According to Microsoft documentation, “dataflows provide a code-free experience to transform and clean data” as an alternative to writing custom data pipeline code. This is particularly useful when dealing with large volumes of data or recurring data quality tasks. Dataflows can enforce consistent cleaning logic (for example, unifying date formats or product codes) across all reports in an organization. By centralizing these transformations, Power BI ensures that every dashboard is built on the same cleansed, structured data foundation, reducing the risk of inconsistent metrics.

- Data Profiling and Validation Features: The Power Query Editor in Power BI Desktop includes data profiling options (column quality, column distribution, and column profile) that help identify quality issues during data import. These tools show value distributions, count empty and error values, and highlight potential outliers in each column. This enables analysts to spot anomalies (like negative values where there should be none, or unexpected text entries in a numeric field) and fix them before loading data into visualizations. Refreshes matter too: the Power BI service can notify the owner when a scheduled refresh fails, and teams can add their own validation checks (for instance, a total that must reconcile with a control total) so that developers are prompted to investigate before bad data silently creeps into reports.

- Content Certification and Endorsement: To bolster trust, Power BI provides a mechanism to certify and endorse datasets and reports. Organizations can mark certain data sources as certified (after thorough verification) or promoted as reliable. This governance feature works together with data quality efforts – it guides users to use the right, clean data. Microsoft’s adoption roadmap recommends “encouraging the use of trusted high-quality data sources”. Executives accessing a Power BI dashboard that is built on a certified dataset can have additional confidence in its accuracy, knowing it passed strict quality checks.

- End-to-End Data Lineage and Traceability: Through integration with tools like Microsoft Purview (formerly Azure Purview) and the built-in lineage view, Power BI enables users to trace data from the dashboard back to the source. This transparency is critical when questioning a number: an executive can see that a sales figure on a dashboard comes, for example, from a specific ERP system table last updated that morning. Such lineage information, combined with metadata (descriptions, owners, refresh time), helps stakeholders trust the data. They know that the data isn’t some black box; it’s auditable and managed. Microsoft highlights that with Power BI and Purview, “analytics users can build and trace key business metrics to the origins of the data”, reinforcing trust in those metrics.
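The profiling and control-total ideas from the list above can be illustrated outside Power BI with a short sketch. It is plain Python, not a reproduction of Power BI's profiling features; the sample column, the `profile_column` and `reconciles` helpers, and the control totals are all hypothetical.

```python
def profile_column(values):
    """Summarize one column: empty, distinct, non-numeric, negative, min, max."""
    non_empty = [v for v in values if v not in (None, "")]
    numeric = []
    non_numeric = 0
    for value in non_empty:
        try:
            numeric.append(float(value))
        except (TypeError, ValueError):
            non_numeric += 1  # e.g. unexpected text in a numeric field
    return {
        "count": len(values),
        "empty": len(values) - len(non_empty),
        "distinct": len(set(non_empty)),
        "non_numeric": non_numeric,
        "negative": sum(1 for n in numeric if n < 0),
        "min": min(numeric) if numeric else None,
        "max": max(numeric) if numeric else None,
        "total": sum(numeric),
    }


def reconciles(loaded_total, control_total, tolerance=0.01):
    """Check that the loaded total matches a control total from the source."""
    return abs(loaded_total - control_total) <= tolerance


# Hypothetical sales column with a gap, a text entry, and a negative value.
sales = [120.5, None, "n/a", -40, 80, 80]
profile = profile_column(sales)
print(profile)

# The control totals here are assumed figures reported by the source system.
print("Matches 240.5:", reconciles(profile["total"], 240.5))
print("Matches 300.0:", reconciles(profile["total"], 300.0))
```

A profile like this flags the text entry and the negative value before they reach a visual, and the reconciliation check gives a simple pass or fail signal that a refresh process could act on.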

Conclusion

Poor data quality is more than a technical nuisance: it is a business liability that can misguide strategy, inflate costs, and erode trust at the highest levels. Microsoft’s own experience and official guidance make it clear that data accuracy, consistency, and cleanliness are essential for effective data-driven decision making. The impact of poor data quality shows up in everything from erroneous day-to-day reports to hesitation in the boardroom about whether to believe the numbers. The good news is that with robust tools like Power BI and Microsoft Purview (formerly Azure Purview), coupled with strong data governance practices, these risks can be actively managed. By investing in data cleaning and validation processes (for example, using Power BI’s built-in features to unify data from different sources and catch errors early), organizations equip themselves to make decisions based on truth, not tangled data. In doing so, they protect their financial health, streamline operations, and maintain the confidence of their executives and stakeholders in the era of business intelligence dashboards and advanced analytics.

FAQs

Why is clean data important in Power BI?

Clean data in Power BI helps produce accurate reports and reliable visuals. This practice reduces mistakes that may lead to wrong business conclusions and extra costs. It also builds trust among decision makers who rely on correct figures for their choices.

What happens when data quality is poor?

Poor data quality can lead to misleading charts and key figures that do not reflect actual performance. It may cause conflicting numbers across reports and raise costs for fixing errors, reducing confidence in the information presented.

Which Power BI tools help clean data?

Power BI offers tools like Power Query and dataflows to modify raw data. These features allow users to remove duplicates, fix formatting issues, and verify accuracy before reports are created. Built-in data profiling helps spot errors early in the process.

Which best practices keep data accurate?

Following best practices, such as using a single data source, testing data at the input stage, and employing a catalog for data definitions, helps keep data accurate. These measures reduce errors and lower the need for manual corrections, leading to more trustworthy insights.

Why validate data early?

Checking data at the start of the process catches errors before they affect reports. This practice reduces incorrect information, saves extra work later, and builds confidence in the figures shown on dashboards.