A Guide to Why Data Engineering Services are Important for Modern Enterprises


- Enterprise-grade data engineering builds the backbone for reliable, scalable, and real-time data use across departments.

- Microsoft Azure Services like Data Factory, Data Lake Storage, and Integration Services offer a strong, connected foundation.

- A solid data engineering framework reduces manual fixes, improves data trust, and accelerates decision-making across the business.

- Clean, well-structured data is essential for powering analytics, business intelligence, and AI initiatives at scale.

Every enterprise wants to be data-driven, but most hit the same wall—bad infrastructure. Data is scattered, poorly managed, and no one trusts the reports. That’s where data engineering services for enterprises step in. When done right, they bring order, reliability, and speed to everything built on top of data.

It’s not about hype or theory. It’s about creating systems that work—at scale, under pressure, across teams. Whether you’re a CTO, CIO, or IT lead, your stack won’t survive long without a proper data engineering framework. It holds the entire system together, from raw ingestion to the polished dashboards your leadership sees.

Read on as we explain why data engineering is an urgent priority: it is the foundation for high-quality digital outcomes and for real success with AI and analytics adoption.

Why Data Engineering Services Matter for Enterprises

Startups can get by with patched-together tools and manual workflows. Enterprises don’t have that luxury. The volume, complexity, and risk are all higher. And that means the focus has to shift from quick wins to long-term reliability. That’s where advanced data engineering plays a critical role—it scales with your business and keeps pace with your goals.

Let’s put this in real terms. A global healthcare provider needs real-time access to patient history from hundreds of systems. A bank wants to scan millions of transactions for fraud in under a second. A retailer runs campaigns based on live customer behavior across regions. These aren’t basic analytics problems. They’re engineering problems that need to be solved for the present and the future. However, pursuing immediate solutions without considering long-term implications can lead to technical debt, undermining system integrity and scalability.

Technical debt in data engineering refers to the long-term costs and challenges that arise from implementing quick, suboptimal solutions to meet immediate business needs. These shortcuts, such as inadequate documentation, poor data modeling, or reliance on manual processes, can multiply maintenance costs and effort, reduce system reliability, and create scalability roadblocks. For instance, every additional data transformation can introduce complexity, slowing down development and increasing maintenance costs. Addressing technical debt proactively is therefore essential to keeping data systems agile and efficient in the long run.

With a well-planned strategy on data engineering and processes built into IT operations, enterprises can see outcomes such as:

- Data Collection at Scale: You’re pulling data from thousands of sources—ERP, CRM, sensors, user interactions, 3rd-party APIs. That data isn’t clean or consistent. A proper data engineering framework ensures every stream is tracked, versioned, and transformed in a controlled way.

- Storage That Grows with You: This is where tools like Microsoft Data Lake Storage come in. It handles raw, unstructured, and semi-structured data across departments, business units, even continents. It gives your teams a single place to store massive amounts of data without rigid schema requirements.

- Processing That Actually Performs: This is not just about “transformation logic.” You need repeatable workflows that can process millions of records on a schedule or in near real time. That’s where Microsoft Azure Data Factory becomes critical. You can schedule jobs, set dependencies, and move data through complex pipelines without building everything from scratch.

- Azure Integration Services: Whether your systems run on SAP, Salesforce, custom APIs, or legacy databases, integration can’t be an afterthought. Azure Integration Services let your engineering teams connect all these sources to the central data system. This simplifies orchestration, keeps dependencies clean, and reduces breakpoints.

- Monitoring and Control: If a pipeline breaks at 3 a.m., someone has to know. Data engineering services include logging, alerts, and data quality gates that prevent bad data from showing up in reports or models. And yes, auditing and traceability are built in.
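To make “tracked, versioned” concrete, here is a minimal Python sketch. It assumes each ingested batch arrives as a list of records. For every batch it records a content hash and a row count, so the same delivery is never loaded twice. `BatchManifest`, `ManifestLog`, and the `crm` source name are hypothetical. In practice the manifest would live in a control table rather than in memory.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class BatchManifest:
    """Metadata recorded for every ingested batch (hypothetical schema)."""
    source: str
    content_sha256: str
    row_count: int
    ingested_at: str


def build_manifest(source: str, rows: list[dict]) -> BatchManifest:
    # Serialize with sorted keys so identical records always hash the same,
    # regardless of the key order a source happens to emit.
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return BatchManifest(
        source=source,
        content_sha256=hashlib.sha256(payload).hexdigest(),
        row_count=len(rows),
        ingested_at=datetime.now(timezone.utc).isoformat(),
    )


class ManifestLog:
    """In-memory stand-in for a manifest table; a real system would persist this."""

    def __init__(self) -> None:
        self._seen: dict[tuple[str, str], BatchManifest] = {}

    def register(self, manifest: BatchManifest) -> bool:
        # Returns False when identical content from the same source was already
        # loaded, so a re-delivered file can be skipped instead of duplicated.
        key = (manifest.source, manifest.content_sha256)
        if key in self._seen:
            return False
        self._seen[key] = manifest
        return True


log = ManifestLog()
rows = [{"order_id": 1, "amount": 40.0}, {"order_id": 2, "amount": 15.5}]
first = log.register(build_manifest("crm", rows))
again = log.register(build_manifest("crm", rows))  # same file delivered twice
print(first, again)  # True False
```

Hashing the content, rather than trusting file names, is one common way to make ingestion idempotent. Suppose a vendor re-sends yesterday’s file: it produces the same hash and is skipped.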

There’s a reason data engineering services are one of the fastest-growing IT service areas. You’re not just trying to “handle more data.” You’re trying to handle it right—with consistency, traceability, and speed.

Companies running their IT infrastructure on Microsoft Azure Services have a head start. With native tools like Azure Data Factory, Microsoft Data Lake Storage, and Azure Integration Services, they’re able to build end-to-end pipelines faster, with fewer moving parts. These tools plug directly into a broader Azure ecosystem, which matters when you’re building systems meant to scale across departments and continents.

For enterprises with serious workloads, advanced data engineering services aren’t optional—they’re the only way to avoid collapse under complexity.

Next, we’ll break down the components of this system: from ingestion to pipelines, to how the whole thing stays alive under pressure.

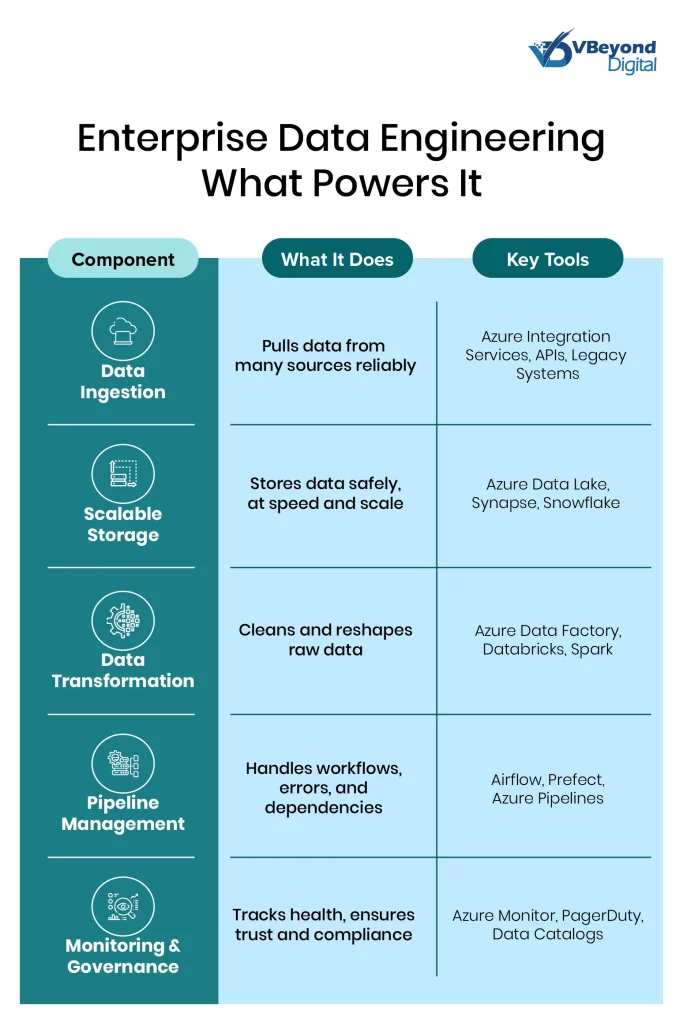

Core Components of Enterprise-Grade Data Engineering

It’s one thing to build a few scripts that move data around. It’s something else entirely to set up data engineering services for enterprises that run daily, handle millions of rows, and support real business outcomes. Here’s what that looks like under the hood—broken down into the real, working parts of a strong data engineering framework.

- Data Ingestion and Collection

This is where everything starts. Enterprises have dozens—sometimes hundreds—of data sources: customer apps, internal systems, sensors, 3rd-party APIs, external vendors. Good data engineering means building systems that pull from these sources without breaking, losing data, or corrupting formats.

Using Microsoft Azure Integration Services, teams can create automated ingestion pipelines from sources like Dynamics 365, SAP, Salesforce, or legacy databases. This takes away the usual pain of manual ETL jobs and allows engineering teams to focus on processing and delivery.
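Whatever tool runs the extraction, a common pattern for pulling from source systems without losing or duplicating rows is the high-water mark (watermark) load. The sketch below is illustrative only. An in-memory list stands in for a source table, and `modified_at` is a hypothetical column name.

```python
from datetime import datetime


def extract_incremental(source_rows, watermark):
    """Return rows modified after `watermark`, plus the new watermark.

    `source_rows` stands in for a query such as
    SELECT * FROM orders WHERE modified_at > @watermark (hypothetical table).
    """
    new_rows = [r for r in source_rows if r["modified_at"] > watermark]
    # Advance the watermark only to the latest timestamp actually seen. The
    # caller should persist it only after the load succeeds, so a failed run
    # can be retried from the same point without skipping data.
    new_watermark = max((r["modified_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


source = [
    {"id": 1, "modified_at": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "modified_at": datetime(2024, 1, 2, 9, 0)},
    {"id": 3, "modified_at": datetime(2024, 1, 3, 9, 0)},
]
batch, wm = extract_incremental(source, datetime(2024, 1, 1, 12, 0))
print([r["id"] for r in batch], wm)  # [2, 3] 2024-01-03 09:00:00
```

One caveat of a strict `>` comparison: a row that shares the exact watermark timestamp but is committed late can be missed. Some teams overlap the window slightly and deduplicate downstream.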

- Data Storage Built for Scale

Once the data comes in, it needs to live somewhere secure, fast, and flexible. This is where Microsoft Data Lake Storage plays a vital role—especially for unstructured or semi-structured data like logs, JSON, or sensor feeds.

For structured data, enterprise-grade data engineering services often rely on cloud data warehouses like Azure Synapse or Snowflake. The important part isn’t the brand—it’s the structure. You want separation of raw, cleaned, and curated data zones. This keeps the flow clean, prevents errors from spreading, and makes auditing possible.
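One way to keep errors from spreading between zones is to promote records from raw to cleaned only after they pass validation, and to quarantine the rest. Here is a minimal sketch; the rule names and fields are hypothetical.

```python
from typing import Callable

Record = dict
Rule = Callable[[Record], bool]


def promote(records: list[Record], rules: dict[str, Rule]):
    """Split raw records into those fit for the cleaned zone and a quarantine list.

    Each rejected record keeps the names of the rules it failed, so it can be
    inspected later instead of silently flowing into curated tables.
    """
    cleaned, quarantined = [], []
    for rec in records:
        failed = [name for name, rule in rules.items() if not rule(rec)]
        if failed:
            quarantined.append({"record": rec, "failed_rules": failed})
        else:
            cleaned.append(rec)
    return cleaned, quarantined


rules = {
    "has_customer_id": lambda r: r.get("customer_id") is not None,
    "non_negative_amount": lambda r: r.get("amount", 0) >= 0,
}
raw = [
    {"customer_id": "C1", "amount": 20},
    {"customer_id": None, "amount": 5},
    {"customer_id": "C3", "amount": -3},
]
cleaned, quarantined = promote(raw, rules)
print(len(cleaned), [q["failed_rules"] for q in quarantined])
# 1 [['has_customer_id'], ['non_negative_amount']]
```

Quarantining rejected records rather than dropping them means nothing disappears silently. The failed-rule names also give whoever investigates a starting point.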

- Transformation and Processing

Raw data on its own is of little use to a business. It’s full of gaps, duplicates, inconsistent formats, and timestamp mismatches. This is where advanced data engineering matters. You need processing layers that clean, transform, and validate the data, ideally in a reproducible, testable way.

Microsoft Azure Data Factory is the go-to tool here. It gives engineering teams the ability to schedule jobs, build reusable pipelines, and move data through a well-defined flow without hardcoding everything. You can also connect ADF with Databricks or Spark-based systems if more advanced processing is needed.
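The kinds of cleanup described above (duplicates, inconsistent formats, timestamp mismatches) can be sketched in plain Python. This is not Data Factory code. It illustrates the logic a transformation step might apply, assuming hypothetical `event_id`, `country`, and ISO 8601 `ts` fields.

```python
from datetime import datetime, timezone


def normalize(rows):
    """Deduplicate on `event_id`, convert timestamps to UTC, and tidy country codes.

    Assumes each row has an ISO 8601 timestamp with a UTC offset, e.g.
    "2024-03-01T10:00:00+02:00" (hypothetical input format).
    """
    latest = {}
    for row in rows:
        ts = datetime.fromisoformat(row["ts"]).astimezone(timezone.utc)
        clean = {
            "event_id": row["event_id"],
            "country": row["country"].strip().upper(),
            "ts": ts,
        }
        # When the same event arrives more than once, keep the row with the
        # latest UTC timestamp.
        prev = latest.get(clean["event_id"])
        if prev is None or clean["ts"] > prev["ts"]:
            latest[clean["event_id"]] = clean
    return sorted(latest.values(), key=lambda r: r["ts"])


rows = [
    {"event_id": "e1", "country": " us ", "ts": "2024-03-01T10:00:00+02:00"},
    {"event_id": "e1", "country": "US", "ts": "2024-03-01T09:30:00+00:00"},
    {"event_id": "e2", "country": "de", "ts": "2024-03-01T07:00:00+00:00"},
]
for r in normalize(rows):
    print(r["event_id"], r["country"], r["ts"].isoformat())
# e2 DE 2024-03-01T07:00:00+00:00
# e1 US 2024-03-01T09:30:00+00:00
```

Convert every timestamp to UTC before comparing or deduplicating. This avoids the ordering mistakes that appear when sources report in local time. In the example, 10:00 at +02:00 is actually earlier than 09:30 UTC.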

- Pipelines and Workflow Management

A real pipeline doesn’t just move data. It manages dependencies, retries failures, and ensures consistency. This means you need a workflow engine. Most enterprise setups rely on tools like Airflow, Prefect, or native Azure tools to handle this layer.

These workflows keep track of job status, alert on failures, and allow for modular builds. You don’t need to rerun a full pipeline because one step failed. Good orchestration saves time, cuts down manual work, and reduces the chance of human error.
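The retry-and-resume behavior described above can be illustrated with a small dependency-ordered runner. It is built on Python’s standard `graphlib` module, which requires Python 3.9+. This is a sketch of the idea, not the API of Airflow, Prefect, or Azure Data Factory, and the step names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, deps, completed=None, max_retries=2):
    """Run steps in dependency order, retrying failures and skipping finished steps.

    steps: step name -> zero-argument callable
    deps: step name -> set of upstream step names (every step must be a key)
    completed: names that already succeeded in an earlier run (the resume point)
    Returns (completed_names, failure), where failure is None or (step, exception).
    """
    completed = set(completed or ())
    for name in TopologicalSorter(deps).static_order():
        if name in completed:
            continue  # finished in a previous run, so skip it
        for attempt in range(1, max_retries + 2):  # first try plus max_retries retries
            try:
                steps[name]()
                completed.add(name)
                break
            except Exception as exc:
                if attempt > max_retries:
                    # Give up on this step; downstream steps are not attempted.
                    return completed, (name, exc)
    return completed, None


calls = {"load": 0}


def extract():
    pass


def transform():
    pass


def flaky_load():
    # Fails on the first call only, simulating a transient timeout.
    calls["load"] += 1
    if calls["load"] < 2:
        raise RuntimeError("transient timeout")


deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
steps = {"extract": extract, "transform": transform, "load": flaky_load}
done, failure = run_pipeline(steps, deps)
print(sorted(done), failure)  # ['extract', 'load', 'transform'] None
```

After a failed run, pass its `completed` set back in as the resume point. The next run then skips the steps that already succeeded, so one failed step doesn’t force a full rerun. Production orchestrators persist this state for you and usually add a backoff delay between retries, which this sketch omits.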

- Monitoring, Testing, and Governance

Every pipeline should be monitored. Every output should be tested. And every transformation should be traceable. Data engineering services must include baked-in monitoring—ideally connected to existing IT alerting systems like PagerDuty or Azure Monitor. Lineage tracking and data cataloging are also key, especially in regulated industries like finance and healthcare. These aren’t add-ons—they’re part of any reliable data engineering framework.

Without this level of setup, your team ends up firefighting. You’re fixing broken reports, answering angry emails, and explaining why a dashboard shows 0 revenue. With these components in place, your data stack runs like any other critical system—reliable, tested, and maintainable.
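Lineage lets a team answer a question like “why does this dashboard show 0 revenue?” by tracing a curated table back to its sources. Below is a minimal in-memory sketch, assuming hypothetical dataset names such as `raw.orders`. Production setups typically rely on a catalog service such as Microsoft Purview rather than hand-rolled code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageGraph:
    """Records which datasets each transformation read and wrote (hypothetical model)."""
    edges: list[dict] = field(default_factory=list)

    def record(self, job: str, inputs: list[str], outputs: list[str]) -> None:
        at = datetime.now(timezone.utc).isoformat()
        for src in inputs:
            for dst in outputs:
                self.edges.append({"job": job, "from": src, "to": dst, "at": at})

    def upstream(self, dataset: str) -> set[str]:
        """All datasets that feed `dataset`, directly or indirectly."""
        found, frontier = set(), [dataset]
        while frontier:
            current = frontier.pop()
            for e in self.edges:
                if e["to"] == current and e["from"] not in found:
                    found.add(e["from"])
                    frontier.append(e["from"])
        return found


lineage = LineageGraph()
lineage.record("clean_orders", ["raw.orders"], ["cleaned.orders"])
lineage.record("build_revenue", ["cleaned.orders", "cleaned.fx_rates"], ["curated.revenue"])
print(sorted(lineage.upstream("curated.revenue")))
# ['cleaned.fx_rates', 'cleaned.orders', 'raw.orders']
```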

In the next section, we’ll look at what this system enables: faster decisions, smoother operations, and fewer surprises. We’ll also get into how this directly connects to business goals.

Business Outcomes from Strong Data Engineering

Good engineering isn’t just about clean code or reliable systems—it’s about what those systems make possible. For enterprises, the value of data engineering services shows up in how decisions are made, how fast teams move, and how much is automated across departments. Let’s look at some specific outcomes and impact.

Faster, Smarter Decision-Making

When data is cleaned, processed, and delivered on time, business teams stop guessing. They can trust the numbers. That alone changes everything. Marketing budgets go where they perform best, finance sees risk earlier, and operations teams know where the bottlenecks are.

With the right data engineering framework, CIOs and CTOs can roll out BI tools across the organization without constant data complaints. Dashboards load faster, metrics are accurate, and leaders get to base strategy on facts, not assumptions.

Operational Efficiency

Manual processes eat time. Bad data causes rework. Engineering teams spend more time fixing pipelines than building new ones. With proper advanced data engineering, all that waste drops.

Take Microsoft Azure Data Factory—once your pipelines are automated and scheduled, you don’t have to touch them every day. Connect that with Microsoft Azure Integration Services, and now you’ve got a real flow between apps, tools, and systems, with minimal manual oversight. This means faster turnarounds, fewer delays, and better use of your engineering team’s time.

Scalability Without Chaos

Adding new products, services, or markets shouldn’t mean rebuilding your data stack from scratch. Good data engineering services for enterprises support growth—they’re built to scale, both in terms of data volume and organizational complexity.

Using services like Microsoft Data Lake Storage, your teams can handle huge amounts of raw data without running into storage or performance issues. Plus, because you can separate raw and refined zones, it’s easier to onboard new teams or projects without breaking the whole system.

Compliance and Audit-Readiness

For industries like finance, healthcare, and energy, data isn’t just for analysis—it’s also subject to strict regulation. GDPR, HIPAA, SOX—whatever applies, your system needs to prove that data is tracked, processed correctly, and protected.

Enterprise-grade data engineering services build in logging, versioning, lineage tracking, and access control from the start. This way, your compliance team isn’t chasing evidence, and your systems are audit-ready without extra work.
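One generic way to make an audit trail tamper-evident is hash chaining. Each entry stores the hash of the entry before it, so any later edit breaks the chain. The sketch below shows the technique in Python. It is not an Azure feature, and the actor and dataset names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only audit trail where each entry includes the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str, dataset: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "dataset": dataset, "prev": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; an edited or removed entry makes this return False."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "dataset", "prev")}
            if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


audit = AuditLog()
audit.append("etl-service", "write", "curated.revenue")
audit.append("analyst-1", "read", "curated.revenue")
print(audit.verify())  # True
audit.entries[0]["actor"] = "someone-else"  # simulate tampering
print(audit.verify())  # False
```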

Real-Time and Predictive Use Cases

Once your pipelines are stable, you can move into higher-value areas—real-time alerts, machine learning models, predictive analytics. But none of that works without a solid base.

That’s where the broader Microsoft Azure Services ecosystem matters. It ties together storage, processing, analytics, and AI in one place. You can move from batch pipelines to real-time streaming, or from static dashboards to predictive models, without switching platforms or rebuilding everything.

Bottom line: when the data engineering is done right, you don’t just “get better data.” You get a faster, leaner, and more responsive business. Every department benefits. Leadership has more clarity. And your tech team spends less time fixing problems—and more time pushing forward.

Next, we’ll look at how to actually integrate these systems using Azure tools, and what an enterprise-ready setup can look like from day one.

Building a Scalable System with Azure Data Engineering Tools

You don’t need a dozen disconnected tools to build a strong data platform. If your enterprise is already working with Microsoft products—or planning to—the Azure ecosystem offers everything you need to roll out serious data engineering services. It’s not just about convenience. It’s about tight integration, security, and scale.

Here’s how to put the pieces together using Microsoft Azure Services, and what that setup looks like when it’s done right.

Azure Data Factory: Your Pipeline Engine

At the center of the build is Microsoft Azure Data Factory. It handles data movement, scheduling, transformation, and orchestration. Using more than 90 built-in connectors, you can set up pipelines that pull data from cloud, on-premises, and SaaS sources and push it into storage or other tools.

It supports both batch and near real-time workloads, so you can choose what works best for each use case. You can also plug in your own code—Python, Spark, SQL—without losing the benefits of managed infrastructure.

For most enterprise teams, ADF replaces a tangle of cron jobs, scripts, and one-off ETLs with a unified system that’s visible, repeatable, and maintainable.

Microsoft Data Lake Storage: Handle Any Data Type

Once you have the data moving, you need a place to put it. Microsoft Data Lake Storage gives you a highly scalable and secure solution for storing raw and processed data. It’s built for high-throughput analytics workloads, so it works well with tools like Azure Synapse, Databricks, and Power BI.

What makes it valuable for enterprise use is how it handles permissioning, data lifecycle rules, and massive volume without slowing down. You can separate raw, staging, and curated zones to prevent overlap and keep your transformations clean.

This setup is critical if you’re rolling out data engineering services for enterprises with multiple departments accessing different layers of the data.
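Lifecycle rules in Azure Storage are defined as a JSON policy. The Python sketch below builds one rule per zone, so older raw data moves to cheaper tiers sooner than curated data does. The container name `datalake`, the prefixes, and the day thresholds are assumptions. Check the field names against the current Azure Storage lifecycle management documentation before applying a policy like this.

```python
import json


def lifecycle_rule(name, prefix, cool_days, archive_days, delete_days):
    """Build one rule in the Azure Storage lifecycle management policy format."""
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            # prefixMatch starts with the container name, then the folder path.
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_days},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_days},
                    "delete": {"daysAfterModificationGreaterThan": delete_days},
                }
            },
        },
    }


policy = {
    "rules": [
        # Raw landing data is rarely re-read, so it moves to cheaper tiers quickly.
        lifecycle_rule("raw-zone-tiering", "datalake/raw/", 30, 90, 365),
        # Curated data stays in the hot tier longer because reports query it often.
        lifecycle_rule("curated-zone-tiering", "datalake/curated/", 180, 365, 1825),
    ]
}
print(json.dumps(policy, indent=2))
```

The retention periods here are placeholders. The right values depend on your regulatory and business retention requirements.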

Azure Integration Services: Connect Everything

Data doesn’t exist in a vacuum. Your systems (CRM, ERP, e-commerce, support platforms) have to talk to each other. That’s where Azure Integration Services come into play. You can combine Logic Apps, API Management, Event Grid, and Service Bus to move messages and data across systems in real time.

This layer makes your data engineering framework not just functional, but connected. Whether you’re syncing customer data between sales and finance or pushing updates between cloud and on-prem environments, integration is what makes the entire thing feel like one system—not 50 disconnected tools.

Security, Identity, and Access

Azure also handles identity and access management through Microsoft Entra ID (formerly Azure Active Directory), role-based access control (RBAC), and managed identities. This matters more than it sounds, especially when dealing with sensitive data. You want clear, enforceable policies for who can access what, and logs to prove it.

This is where a lot of DIY data platforms fall apart. They forget governance, and then spend months trying to fix it later. With Microsoft Azure Services, those controls are available natively, so you can build them in from day one instead of retrofitting them.
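Here is a small sketch of the deny-by-default, log-every-decision idea behind role-based access. The roles and zone grants are hypothetical. In Azure, the equivalent would be role assignments (for example, the built-in Storage Blob Data Reader role) scoped to containers or directories, not a Python dictionary.

```python
# Hypothetical roles mapped to (zone, permission) grants.
ROLE_GRANTS = {
    "data_engineer": {
        ("raw", "read"), ("raw", "write"),
        ("cleaned", "read"), ("cleaned", "write"),
        ("curated", "read"), ("curated", "write"),
    },
    "analyst": {("curated", "read")},
    "auditor": {("raw", "read"), ("cleaned", "read"), ("curated", "read")},
}

access_log = []


def is_allowed(roles, zone, permission):
    """Deny by default: access is granted only if some assigned role lists it."""
    return any((zone, permission) in ROLE_GRANTS.get(role, set()) for role in roles)


def access(user, roles, zone, permission):
    allowed = is_allowed(roles, zone, permission)
    # Every decision is logged, allowed or not, so there is evidence for auditors.
    access_log.append({"user": user, "zone": zone, "permission": permission, "allowed": allowed})
    return allowed


print(access("maria", ["analyst"], "curated", "read"))  # True
print(access("maria", ["analyst"], "raw", "read"))      # False
```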

Cost Control and Monitoring

It’s easy for cloud data systems to spiral out of control on cost—especially when workloads grow. Azure gives you built-in monitoring and budget alerts, plus fine-grained billing tied to services and departments. This helps IT teams stay in control without cutting corners.

You also get full observability into your pipelines, jobs, failures, and resource usage—across tools like Azure Monitor, Log Analytics, and Application Insights.

Putting it all together, here’s what an Azure-based setup for advanced data engineering looks like:

- Ingestion and integration: Azure Data Factory + Azure Integration Services

- Storage: Microsoft Data Lake Storage

- Processing: ADF, Azure Synapse, or Databricks

- Monitoring: Azure Monitor, Log Analytics

- Access and security: Microsoft Entra ID (formerly Azure AD) + RBAC

- Governance: Lineage tracking, audit logs, data cataloging

This setup can scale from one team to the entire enterprise. And because it’s built on the same cloud services you’re likely already using, it fits into your broader IT strategy—without creating silos or new security risks.

In the next section, we’ll talk about how to get started—what steps matter most in the early stage, and how to avoid the common traps when rolling out enterprise data engineering services.

Getting Started with Data Engineering: What to Prioritize First

Rolling out data engineering services for enterprises isn’t a plug-and-play move. You can’t just hire a few engineers, spin up a cloud account, and hope the system builds itself. The early steps matter. They set the tone for whether this becomes a solid, scalable platform—or another failed project buried under budget overruns and endless rework.

Here’s where to focus early on.

Start with Use Cases, Not Tools

Don’t get trapped in the tool-first mindset. Start by listing your critical use cases: what decisions do your teams need to make with data? What reports are unreliable? What’s breaking every month?

Maybe your sales forecasts are off because pipeline data is delayed. Maybe supply chain teams don’t trust vendor performance reports. Maybe marketing is making spend decisions based on old numbers. These pain points should guide what the first round of data engineering services is built to fix.

From there, map out the data sources involved, and what kind of processing is needed. Only then should you choose the tools.

Design the Data Engineering Framework Upfront

A proper data engineering framework is like a house blueprint. It defines how raw data moves, where it’s stored, how it’s cleaned, and who gets access to what. If you skip this and just start building pipelines, you’ll regret it fast.

Start with:

- Data zones (raw, staging, curated)

- Naming conventions

- Transformation logic standards (SQL, dbt, notebooks)

- Testing and validation steps

- Security and role-based access

It doesn’t have to be perfect on day one—but you do need structure. Otherwise, your engineers will each build their own flavor of “how this should work,” and the whole thing will collapse due to inconsistencies.
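Conventions only help if they’re enforced. A small check like the one below, run in CI before pipelines are deployed, can catch names that don’t follow the agreed pattern. The `<zone>_<domain>_<entity>` convention is a hypothetical example built on the raw, staging, and curated zones listed above.

```python
import re

# Hypothetical convention: <zone>_<domain>_<entity> in lower snake_case,
# where the zone must be one of the framework's data zones.
NAME_PATTERN = re.compile(r"(raw|staging|curated)_[a-z][a-z0-9]*_[a-z][a-z0-9_]*")


def check_names(names):
    """Return the dataset names that break the convention."""
    return [n for n in names if not NAME_PATTERN.fullmatch(n)]


print(check_names(["curated_sales_orders", "raw_crm_contacts", "SalesFinal_v2", "tmp_orders"]))
# ['SalesFinal_v2', 'tmp_orders']
```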

Use Azure Services to Reduce Complexity

If you’re already on Azure—or heading there—this part gets easier. Services like Microsoft Azure Data Factory, Azure Integration Services, and Microsoft Data Lake Storage give you a reliable baseline to build on.

You won’t have to build connectors from scratch. You won’t need to write custom authentication wrappers for every API. You get a secure, scalable environment that fits enterprise-grade needs from day one. That means fewer edge cases, faster development, and lower long-term maintenance.

Invest in Monitoring Early

Don’t wait for things to break before you care about monitoring. Set up logging and pipeline health checks from the start. Use Azure Monitor, build Slack or Teams alerts, and make sure your team knows when something’s failing—before someone from finance sends a confused email about “why the numbers don’t match.”
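A freshness check is one of the simplest health checks to start with: if a table hasn’t loaded within its expected window, alert someone. The sketch assumes you can look up each table’s last successful load time; the table names are hypothetical. It posts to a Slack incoming webhook, which accepts a JSON body with a `text` field. Teams webhooks may expect a different payload, so check the format your Teams integration requires.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone


def stale_tables(last_loaded, max_age, now=None):
    """Return tables whose last successful load is older than `max_age`."""
    now = now or datetime.now(timezone.utc)
    return sorted(t for t, ts in last_loaded.items() if now - ts > max_age)


def build_alert(tables):
    # Slack incoming webhooks accept a JSON body with a "text" field.
    return {"text": "Stale data: " + ", ".join(tables)}


def send_alert(webhook_url, payload):
    """POST the alert. Keep the webhook URL in a secret store, not in code."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


now = datetime(2024, 5, 1, 8, 0, tzinfo=timezone.utc)
last_loaded = {
    "curated.revenue": datetime(2024, 5, 1, 3, 0, tzinfo=timezone.utc),
    "curated.inventory": datetime(2024, 4, 29, 3, 0, tzinfo=timezone.utc),
}
stale = stale_tables(last_loaded, max_age=timedelta(hours=24), now=now)
if stale:
    print(build_alert(stale)["text"])  # Stale data: curated.inventory
    # send_alert(YOUR_WEBHOOK_URL, build_alert(stale))  # hypothetical URL variable
```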

Also, track data quality. Run row counts, check for nulls, test business logic. Make sure bad data can’t sneak past your processing layer.
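The three checks mentioned above (row counts, nulls, and business logic) can be combined into a single gate that a pipeline runs before publishing a batch. Here is a minimal sketch using a hypothetical invoice batch and rule.

```python
def run_quality_checks(rows, expected_min_rows, required_cols, rules):
    """Return a list of failure messages; an empty list means the batch may proceed."""
    failures = []
    if len(rows) < expected_min_rows:
        failures.append(f"row count {len(rows)} below expected minimum {expected_min_rows}")
    for col in required_cols:
        nulls = sum(1 for r in rows if r.get(col) is None)
        if nulls:
            failures.append(f"{nulls} null value(s) in '{col}'")
    for name, rule in rules.items():
        bad = sum(1 for r in rows if not rule(r))
        if bad:
            failures.append(f"{bad} row(s) violate '{name}'")
    return failures


batch = [
    {"invoice_id": "A1", "net": 100.0, "tax": 20.0, "gross": 120.0},
    {"invoice_id": None, "net": 50.0, "tax": 10.0, "gross": 60.0},
    {"invoice_id": "A3", "net": 80.0, "tax": 16.0, "gross": 90.0},
]
problems = run_quality_checks(
    batch,
    expected_min_rows=3,
    required_cols=["invoice_id", "net"],
    # Business rule: gross must equal net + tax, to within half a cent.
    rules={"gross_equals_net_plus_tax": lambda r: abs(r["net"] + r["tax"] - r["gross"]) < 0.005},
)
for p in problems:
    print(p)
```

In a pipeline, a non-empty result would fail the run, for example by raising an exception. That keeps the batch out of reports until someone investigates.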

Staff for Engineering, Not Just Analysis

A common mistake: loading up on data analysts before the pipes are ready. Analysts can’t do much if the data is delayed, dirty, or inconsistent. You need engineers first—people who know how to build and maintain systems that run every day without manual fixes.

That means hiring or training people with experience in pipeline orchestration, cloud storage, and large-scale data processing. Once the system is running cleanly, analysts will actually have something solid to work with.

For larger rollouts or aggressive timelines, it also makes sense to engage third-party data engineering service providers who’ve already done this at scale. They can bring battle-tested patterns, help accelerate delivery, and reduce risk, especially when internal bandwidth is limited.

Conclusion

Data engineering isn’t about dashboards or visuals—it’s the invisible structure that makes reliable, fast, and scalable data systems possible. For enterprises, it’s what turns raw, scattered data into something that actually powers decisions, automates processes, and connects departments. The key is building a setup that holds up under pressure: one that handles volume, adapts to change, and doesn’t collapse every time a new team or product is added.

In this blog, we broke down what that system looks like. A clear data engineering framework, strong early use cases, the right tools—especially within Microsoft Azure Services—and a mix of internal engineers and third-party expertise where needed. Enterprises that get this right aren’t just collecting more data—they’re actually using it to improve how the business runs, every day. That’s where the real value is.

If your team is looking to build a system that actually scales, VBeyond Digital can help. We offer end-to-end data engineering services designed for enterprises that need real results—faster pipelines, cleaner data, and a setup built for growth.

Whether you’re building on Microsoft Azure, modernizing your stack, or starting from scratch, our experts plug in where it matters most. Get in touch today.
