Global Capability Centers as an “AI Value Factory”: operating model + outcome SLAs (not effort) for analytics, automation, and cloud ops


- The blog defines an AI Value Factory for a Global Capability Center and maps business outcomes to services in the analytics operating model and GCC analytics automation portfolio.

- It details a Global Capability Center operating model with product-aligned pods, shared platforms, and Site Reliability Engineering (SRE)-style measurement using Service Level Objectives (SLOs) and error budgets.

- It provides outcome-based Service Level Agreement (SLA) patterns and concrete examples for analytics, automation workflow reliability, and cloud ops, grounded in measurable Service Level Indicators (SLIs).

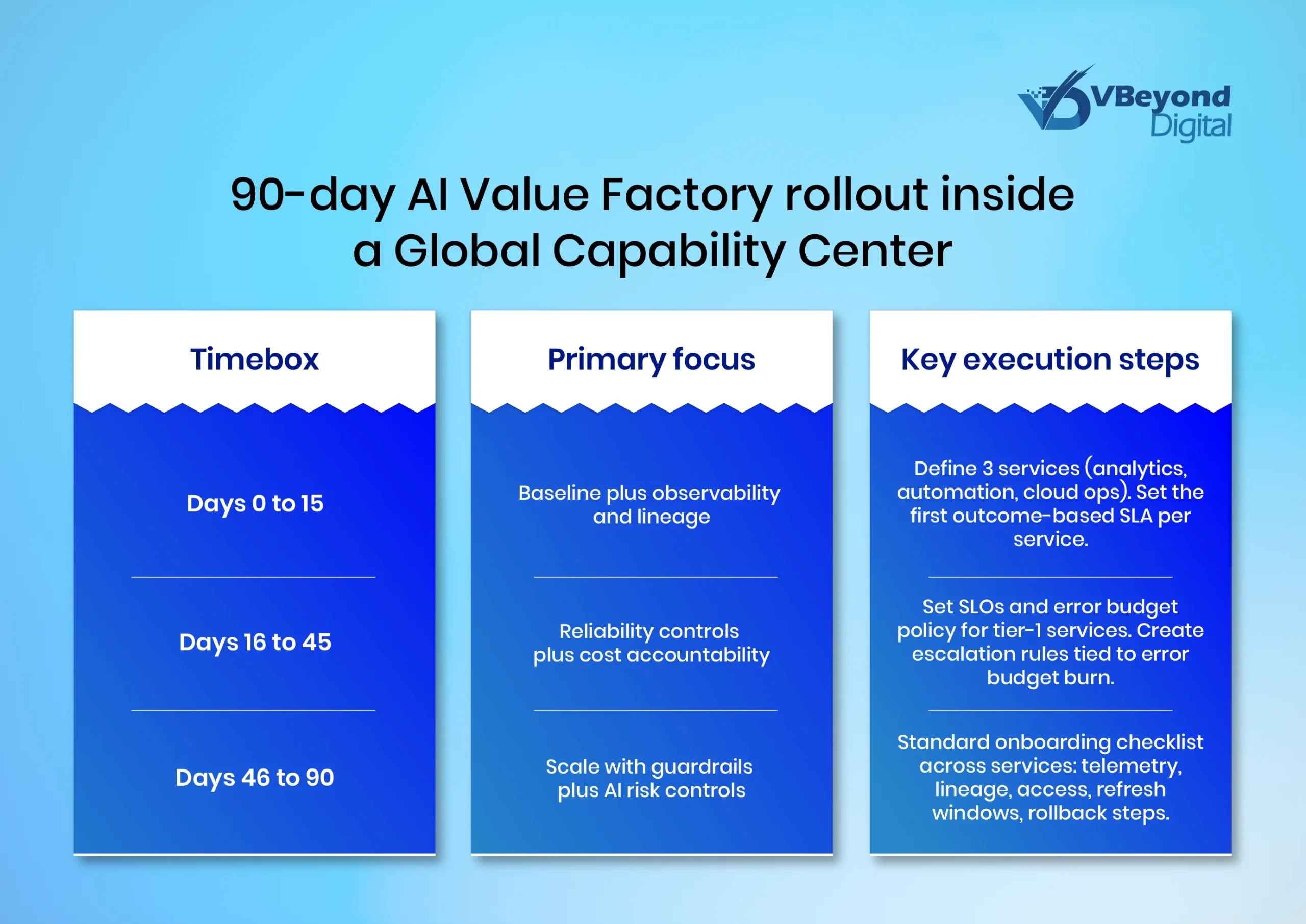

- It lays out an execution plan focused on integration complexity, scalability limits, data visibility, cost allocation, and AI risk controls, including GCC performance measurement scorecards.

CIOs and CTOs are under constant pressure to show outcomes, not activity. The business wants faster decisions from data, fewer repeat incidents in production, and lower run cost, but many delivery reports still center on effort metrics like tickets closed, sprint velocity, or hours billed. That gap becomes visible when integration complexity rises, cloud spend becomes harder to explain, and leaders cannot trace operational work to measurable business results.

This matters at scale. Property consultancy Vestian estimates there are roughly 3,200 Global Capability Centers worldwide. Separately, Mordor Intelligence estimates the Global Capability Centers market at about USD 649.16B in 2026. When a delivery model at this scale reports effort instead of outcomes, the cost of poor visibility and slow decision cycles is material.

The scope has also broadened. A Reuters report citing a Nasscom Zinnov view notes that GCCs increasingly support parent companies across operations, finance, and R&D, not only back-office work. That means GCC performance shows up in revenue protection, risk posture, and service reliability.

The practical answer is an AI Value Factory model: a Global Capability Center operating model that treats GCC analytics automation and cloud ops as products with outcome-based SLA commitments. A service level agreement defines the expected service level and the metrics used to measure it.

VBeyond Digital’s positioning maps cleanly to this problem: it offers GCC advisory and execution plus Microsoft-centered analytics and automation services (Power BI, Power Platform) and Azure services, which are the building blocks many enterprises already run. As we say, “We bring clarity and velocity to your digital initiatives.”

What this blog will cover, end to end:

- How to define a Global Capability Center operating model around business outcomes.

- How to write an outcome-based SLA for GCC analytics automation and cloud ops.

- How to handle integration complexity, scaling pressure, data visibility gaps, and risk controls in production systems.


Start with business outcomes: Define the charter and value map

If you want a Global Capability Center to function as an AI Value Factory, start by treating analytics, automation, and cloud ops as services that exist to deliver business outcomes, not as “work” to be staffed.

That definition forces a useful question for every initiative: Which outcome improves, and how will we measure it in production?

1) Write the GCC charter in outcome language

A practical Global Capability Center operating model charter typically includes 3 to 5 measurable goals tied to business priorities:

- Decision latency: Time from source close to decision-ready metric (finance close, demand signals, risk flags).

- Operational reliability: User-visible availability and recovery time for tier-1 services.

- Run cost control: Cloud cost variance and cost attribution coverage.

- Risk controls: Audit evidence completeness, data access policy adherence, model change approvals.

This becomes the backbone for GCC performance measurement.

2) Build a value map from outcomes to “service products”

Create a lightweight service catalog that lists what the GCC provides, to whom, and the performance targets. An IT service catalog is commonly described as a user-friendly list of IT services including items like costs and SLAs.

For GCC analytics automation, the value map usually breaks into:

- Analytics operating model services: Certified datasets, metrics layer, dashboard products, forecasting services.

- Automation services: Workflow automations with exception handling, audit trails, and clear ownership.

- Cloud ops services: Observability, incident response, patching, capacity and security operations.

Each item gets an outcome-based SLA that defines what will be delivered and how performance is measured, which is a core purpose of an SLA.

Operating model mechanics: How the GCC runs like an AI Value Factory

An AI Value Factory needs a production-grade Global Capability Center operating model: clear ownership, measurable services, and control loops that surface risk early. The goal is repeatable outcomes across GCC analytics automation and cloud ops, not ticket volume.

1) Organize around products, with platform guardrails

A workable structure for most Global Capability Centers comprises:

- Value stream pods (product-aligned): Each pod owns a business outcome, a backlog, and a run posture.

Examples: Claims decisioning, fraud monitoring, pricing, order fulfillment reliability.

- Shared platform teams (internal products): These include data platform, integration platform, observability, cloud foundation, and security engineering.

- Reliability function (SRE-style): It defines SLI/SLO standards and error-budget policies. Google’s SRE guidance explains why SLOs should allow misses and how an error budget becomes a control mechanism for balancing reliability work with feature work.

2) Intake is a contract, not a request

Every intake item should read like an internal contract tied to an outcome-based SLA:

- Outcome statement (example: reduce decision latency for weekly pricing).

- Baseline and target metric (P50 and P95 where relevant).

- SLI definition and measurement source.

- Integration plan and data contract owners.

- Run ownership, escalation, and a review cadence.
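The intake fields above can be captured as a structured record so that incomplete requests are caught before work starts. The following is a minimal sketch, assuming a hypothetical `IntakeContract` class; every field name and the example values are illustrative and do not come from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class IntakeContract:
    """Hypothetical intake record; field names are illustrative only."""
    outcome_statement: str
    baseline: dict[str, float]        # e.g. {"p50": 30.0, "p95": 72.0}
    target: dict[str, float]
    sli_definition: str
    measurement_source: str
    data_contract_owners: list[str]
    run_owner: str
    escalation_contact: str
    review_cadence_days: int

    def missing_fields(self) -> list[str]:
        """Return names of fields left empty so incomplete intakes can be sent back."""
        return [name for name, value in vars(self).items() if not value]


# Example with made-up values: two fields are deliberately left empty.
request = IntakeContract(
    outcome_statement="Reduce decision latency for weekly pricing",
    baseline={"p50": 30.0, "p95": 72.0},
    target={"p50": 12.0, "p95": 24.0},
    sli_definition="Hours from source close to decision-ready metric",
    measurement_source="",
    data_contract_owners=[],
    run_owner="pricing-pod",
    escalation_contact="pricing-oncall",
    review_cadence_days=14,
)
print(request.missing_fields())
```

A check like this is only a gate on completeness; whether the baseline and target are realistic still needs a human review.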

3) Measurement backbone: SLOs, telemetry, lineage, and delivery metrics

For GCC performance measurement, combine reliability and delivery signals:

- Reliability control loop (SLO + error budget)

Feature: Define SLO targets per service, track budget burn, and pause non-critical changes when the error budget is exhausted.

- End-to-end telemetry

Feature: Instrument traces, metrics, and logs with OpenTelemetry so cross-system failures show up as a single incident path across services.

- Lineage for data visibility

Feature: Capture dataset, job, and run lineage using OpenLineage so pipeline changes have a documented blast radius and clear owners.

- Change and recovery signals (DORA / Four Keys)

Feature: Track lead time, deployment frequency, change failure rate, and time to restore service as standard signals for change risk and recovery capability.

- Audit capture for Power Platform automation

Feature: Centralized visibility into flow creation, connector calls, and flow runs or failures with identity context using Microsoft Purview activity logs for Power Automate.
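The reliability control loop in the first item can be made concrete with a small calculation. The sketch below assumes an event-based SLO (good requests divided by total requests) over one window; the function name `error_budget_status` and the rule of freezing changes at 100% consumption are illustrative assumptions, and real policies often act earlier based on burn rate.

```python
def error_budget_status(slo_target: float, total_events: int, bad_events: int) -> dict:
    """Compute error-budget consumption for one SLO window.

    slo_target is a fraction, e.g. 0.999 for a 99.9% success SLO.
    """
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    if total_events <= 0:
        raise ValueError("total_events must be positive")
    allowed_bad = (1 - slo_target) * total_events  # budget expressed in events
    consumed = bad_events / allowed_bad            # 1.0 means the budget is fully spent
    return {
        "allowed_bad_events": allowed_bad,
        "budget_consumed": consumed,
        "freeze_non_critical_changes": consumed >= 1.0,
    }


# Made-up numbers: a 99.9% SLO over 1,000,000 requests allows about 1,000 failures.
status = error_budget_status(slo_target=0.999, total_events=1_000_000, bad_events=250)
print(status)
```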

Outcome SLAs: What to measure for analytics, automation, and cloud ops

An outcome-based SLA works when it reads like a contract: what service is provided, what performance is expected, how it is measured, and what happens if targets are missed. In an AI Value Factory inside a Global Capability Center, most teams also define internal SLOs (targets measured by SLIs) and use error budgets as the control loop for reliability work versus change work.

Below is a practical menu you can apply within a Global Capability Center operating model for GCC analytics automation and cloud ops. The intent is measurable outcomes, backed by telemetry and audit trails.

A) Analytics operating model: SLAs tied to decision latency and data trust

Pain point: Analytics fail silently when upstream systems change, refresh windows slip, or data quality degrades. The business experiences it as slow or wrong decisions.

Outcome SLAs that hold up in production

1. Decision readiness (freshness)

- SLI: Percentage of tier-1 metrics updated within the agreed window from the source close.

- SLO example: 99% of tier-1 metrics updated within 6 hours of source close (per business calendar).

- Instrumentation notes: SLA must match platform constraints. Power BI scheduled refresh frequency differs by capacity, and refresh duration has documented limits on shared capacity.

2. Trust and stability

- SLI: Data quality check pass rate for tier-1 datasets plus schema change detection time.

- SLO example: 99.5% pass rate on critical rules, schema changes detected and flagged within 15 minutes of the change.

- Operational control: Incident taxonomy for “data incident” vs “report defect,” with RCA and prevention backlog.
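The decision-readiness SLI above reduces to a simple ratio once source-close and metric-ready timestamps are available. The sketch below assumes those timestamps have already been collected; the function name `freshness_sli` is hypothetical, and the calculation uses wall-clock time, so it does not apply the business calendar mentioned in the SLO example.

```python
from datetime import datetime, timedelta


def freshness_sli(refreshes: list[tuple[datetime, datetime]], window: timedelta) -> float:
    """Share of tier-1 metrics refreshed within `window` of source close.

    Each tuple is (source_close_time, metric_ready_time).
    """
    if not refreshes:
        raise ValueError("no refresh records supplied")
    on_time = sum(1 for closed, ready in refreshes if ready - closed <= window)
    return on_time / len(refreshes)


# Made-up records: four tier-1 metrics, ready 2, 5, 6, and 9 hours after close.
close = datetime(2025, 1, 6, 18, 0)
records = [(close, close + timedelta(hours=h)) for h in (2, 5, 6, 9)]
sli = freshness_sli(records, window=timedelta(hours=6))
meets_slo = sli >= 0.99  # compare against the 99% target from the SLO example
print(sli, meets_slo)
```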

B) Automation workflow reliability: SLAs tied to cycle time, exceptions, and auditability

Pain point: Automation that scales without governance creates new failure modes, including connector throttling, brittle rules, and ambiguous ownership when workflows fail.

Outcome SLAs for automation

- Cycle time SLA

- SLI: Median and P95 end-to-end processing time for the workflow.

- SLO example: Median under 10 minutes, P95 under 60 minutes during business hours.

- Exception rate SLA

- SLI: Percentage of items requiring human intervention.

- SLO example: Exceptions under 3%, with reason codes captured for all exceptions.

- Audit evidence SLA

- SLI: Percentage of workflow runs with complete run history and identity context retained for the audit window.

- Control option: Centralized audit capture of workflow execution (including flow runs and failures), connector activity, and flow creation events, with retained identity context, supported by Microsoft Purview.
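The cycle time and exception rate SLIs can be evaluated from run records with a few lines of code. The sketch below is one possible approach, assuming durations are recorded in minutes and using a nearest-rank percentile; the function names are hypothetical, and the default thresholds simply mirror the SLO examples above.

```python
import math
from statistics import median


def nearest_rank_percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def workflow_slo_report(durations_min: list[float], exceptions: int, total_items: int,
                        median_limit: float = 10, p95_limit: float = 60,
                        exception_limit: float = 0.03) -> dict:
    """Evaluate cycle-time and exception-rate SLIs against their targets."""
    if total_items <= 0:
        raise ValueError("total_items must be positive")
    p50 = median(durations_min)
    p95 = nearest_rank_percentile(durations_min, 95)
    exception_rate = exceptions / total_items
    return {
        "median_min": p50,
        "p95_min": p95,
        "exception_rate": exception_rate,
        "median_ok": p50 < median_limit,
        "p95_ok": p95 < p95_limit,
        "exceptions_ok": exception_rate < exception_limit,
    }


# Made-up run durations in minutes; one slow run pushes P95 past the limit.
report = workflow_slo_report([4, 6, 7, 8, 9, 9, 12, 15, 20, 75], exceptions=2, total_items=100)
print(report)
```

Reporting the median and P95 together matters here: in the made-up data the median looks healthy while the tail breaches the target, which is the case a single average would hide.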

C) Cloud ops: SLAs tied to reliability, recovery, and cost accountability

Pain point: Cloud ops teams drown in alerts but cannot connect reliability, spend, and risk to products and outcomes.

Outcome SLAs that map to executive metrics

1. Availability and latency

- Define SLOs based on SLIs (request success rate, latency percentiles).

- Add an error budget policy that specifies what changes stop when the budget is burned, since error budgets are designed to balance reliability with the pace of change.

2. Recovery

- SLI: Time to restore service for priority incidents.

- SLO example: 90% of P1 incidents restored within 60 minutes, 99% within 4 hours.

3. Cost accountability

- SLI: Percentage of cloud spend allocated to products and owners (allocation coverage).

- FinOps defines allocation as apportioning cloud costs using account structure, tags, labels, and derived metadata so owners understand the costs they are responsible for.
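The recovery and cost accountability SLIs are both ratios that can be computed from incident and billing exports. The sketch below assumes restore times in minutes and cost line items represented as dictionaries with hypothetical `cost`, `product`, and `owner` keys; real billing exports will use different field names and tagging schemes.

```python
def share_within(restore_minutes: list[float], limit_minutes: float) -> float:
    """Fraction of incidents restored within the given limit."""
    if not restore_minutes:
        raise ValueError("no incidents supplied")
    return sum(1 for m in restore_minutes if m <= limit_minutes) / len(restore_minutes)


def allocation_coverage(cost_items: list[dict]) -> float:
    """Fraction of spend that carries both a product and an owner (hypothetical keys)."""
    total = sum(item["cost"] for item in cost_items)
    if total <= 0:
        raise ValueError("total cost must be positive")
    allocated = sum(
        item["cost"] for item in cost_items
        if item.get("product") and item.get("owner")
    )
    return allocated / total


# Made-up P1 restore times in minutes, checked against the two-tier SLO example.
p1_restore = [12, 35, 48, 55, 58, 59, 60, 90, 130, 300]
recovery_ok = share_within(p1_restore, 60) >= 0.90 and share_within(p1_restore, 240) >= 0.99

# Made-up cost lines: only the first has both a product and an owner.
costs = [
    {"cost": 600.0, "product": "pricing", "owner": "pricing-pod"},
    {"cost": 300.0, "product": "claims", "owner": None},
    {"cost": 100.0},
]
print(recovery_ok, allocation_coverage(costs))
```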

Conclusion

A Global Capability Center becomes an AI Value Factory when it operates like a measurable service organization: clear customers, explicit service definitions, and outcomes verified in production. The core move is shifting from activity reporting to a Global Capability Center operating model anchored in an outcome-based SLA for every analytics, automation, and cloud ops service.

VBeyond Digital brings clarity and velocity to these programs by connecting the analytics operating model, GCC analytics automation, and automation workflow reliability to GCC performance measurement that business leaders can act on.

FAQs (Frequently Asked Questions)

In a Global Capability Center operating model, an AI Value Factory means running GCC analytics automation and cloud ops as internal services with measurable outcomes. Each service is defined through an outcome-based SLA that states what is delivered, the performance target, how it is measured, and what happens if targets are missed, then managed using SLOs and production telemetry.

Outcome-based SLAs work better because they specify the service and expected performance in measurable terms, including how performance is verified and what actions follow if targets are missed. Effort measures like hours billed or tickets closed can increase while decision speed, reliability, or cost accountability does not improve, which weakens CIO-level governance and GCC performance measurement.

Examples include decision readiness measured as the percent of tier-1 metrics refreshed within an agreed window after source close, data trust measured as critical rule pass rates and time to detect breaking schema changes, and data visibility measured as lineage coverage so downstream impact can be assessed when pipelines change.

It improves cloud ops by grounding reliability targets in SLOs and using an error budget policy to control the balance between change work and reliability work. It also reduces incident response blind spots by standardizing telemetry collection across traces, metrics, and logs, improving correlation across distributed services.

Start by selecting a small set of high-impact services and writing one outcome-based SLA for each, including the SLI, target, and measurement source. Then instrument those services so the SLA can be verified from production signals, with early standardization of telemetry to surface integration and scaling issues faster.