Agentic AI for Data Engineering: Scaling Intelligence Beyond Pipelines

Table of Contents

- Where Traditional Pipelines Fall Short

- What Agentic AI Brings to Data Engineering

- The Critical Role of Data Observability

- Key Use Cases CIOs and CTOs Care About

- Business Impact: Metrics That Matter

- Practical Adoption Roadmap

- VBeyond Digital: Your Data Engineering Partner

- Conclusion

- FAQs (Frequently Asked Questions)

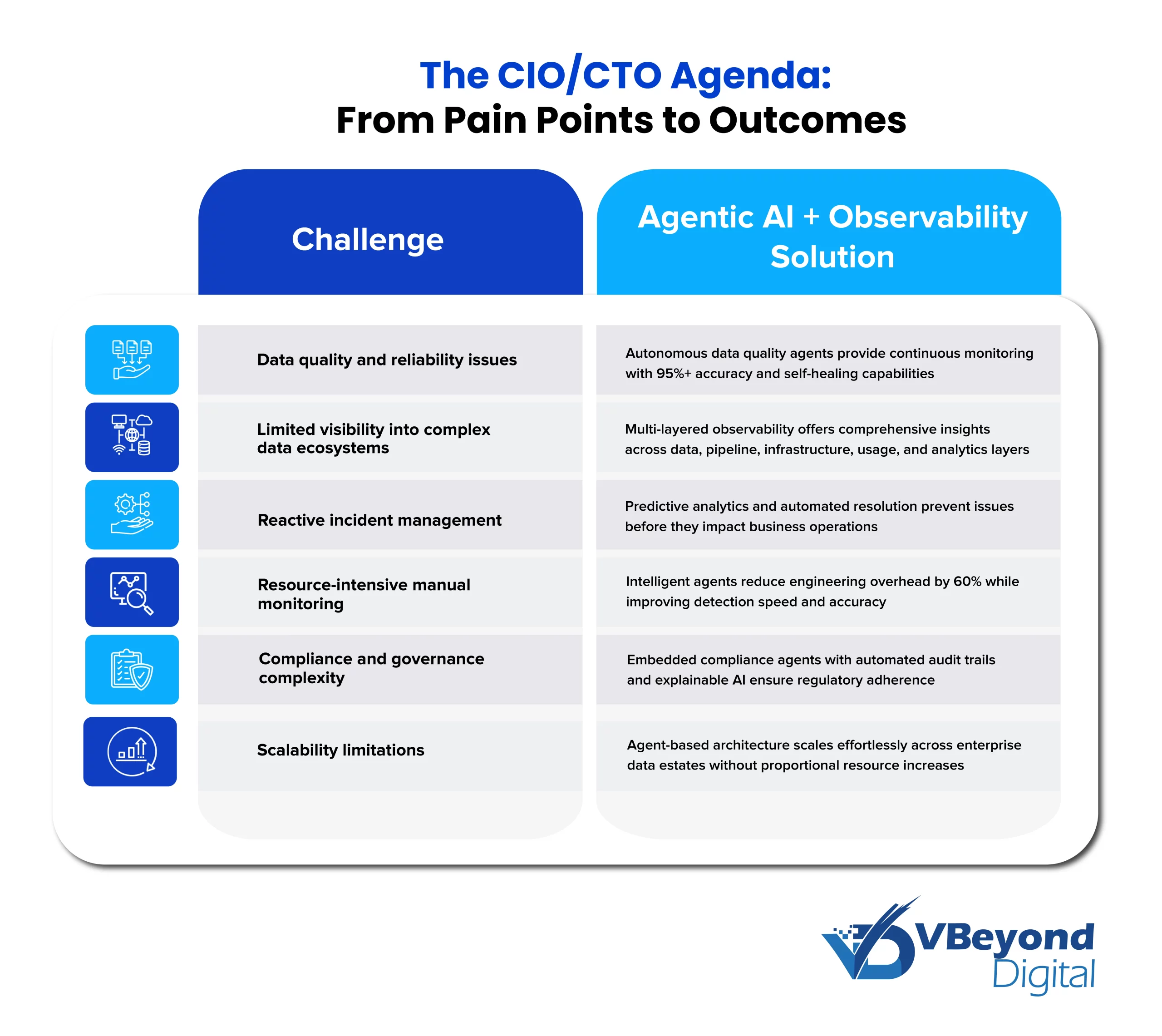

• Traditional data pipelines are breaking down under modern complexity, making them costly, rigid, and slow to adapt while causing data quality issues that erode business confidence.

• Agentic AI introduces self-managing, adaptive data systems that collaborate across ingestion, validation, and delivery while continuously optimizing without human intervention.

• Data observability is the foundation enabling intelligent monitoring across quality, lineage, anomalies, performance, and governance to create resilient, reliable ecosystems.

• The business impact is transformative with up to 60% less engineering effort, faster analytics delivery, stronger compliance, and scalable, self-healing operations that future-proof enterprises.

Traditional data pipelines are failing to keep pace with modern business demands. These rigid, maintenance-heavy systems create operational bottlenecks that prevent organizations from capitalizing on their data investments. For CIOs and CTOs facing escalating data complexity, integration challenges, and mounting infrastructure costs, the status quo is no longer sustainable.

Agentic AI represents a fundamental shift from reactive pipeline management to intelligent, autonomous data systems that collaborate, adapt, and continuously optimize without constant human intervention. Beyond deploying smarter models, this shift is about creating self-managing data ecosystems that reduce operational drag while accelerating decision-making across the enterprise.

Where Traditional Pipelines Fall Short

Modern enterprises grapple with an ever-expanding variety of data sources spanning structured databases, semi-structured APIs, and unstructured content streams. Traditional ETL pipelines require constant manual reconfiguration when schemas change or new data sources emerge, creating significant operational overhead that consumes up to 44% of data engineers’ time and costs companies approximately $520,000 annually.

The scalability challenge is particularly acute. According to a BCG survey of technical C-suite executives, almost 50% of respondents said that 30% of large-scale technology projects in their organizations run over time and budget. When customer identification fields transition from numeric to alphanumeric formats or when new columns appear in source systems, conventional pipelines often fail catastrophically, requiring extensive manual intervention to restore functionality.

Operational Visibility and Business Impact

Traditional pipeline architectures provide limited real-time insight into data health and quality. Organizations experience 67 monthly data incidents requiring an average of 15 hours to resolve, a 166% increase from 2022’s 5.5-hour average. This degradation reflects the growing complexity of distributed data architectures where 47% of newly created data records contain critical work-impacting errors.

The business consequences are severe. Data quality issues cost organizations 31% of their revenue, while 68% of companies require 4+ hours just to detect data quality problems. This detection lag compounds business impact before any remediation can begin, undermining confidence in data-driven decision-making processes.

What Agentic AI Brings to Data Engineering

Autonomous Execution and Adaptive Systems

Agentic AI systems operate with high degrees of autonomy, making decisions, learning from interactions, and executing complex tasks with minimal human intervention. Unlike traditional rule-based automation, these systems can understand schema semantics, infer data relationships, and orchestrate workflows dynamically.

When CSV files gain new columns or API endpoints change data formats, agentic systems automatically modify transformation logic and resume processing without causing downstream failures. This adaptive capability extends to intelligent scheduling that adjusts execution based on context. If data sources are delayed, pipelines pause or reroute automatically rather than failing.
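The schema-drift case described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column names and the `load_rows` helper are hypothetical, and a real agentic system would do considerably more than project each row onto the columns it already knows.

```python
import csv
import io

# Hypothetical contract: the columns that downstream steps rely on.
EXPECTED_COLUMNS = ("customer_id", "amount")

def load_rows(csv_text, expected=EXPECTED_COLUMNS):
    """Return (rows, extra_columns) without failing when columns are added."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [col for col in expected if col not in header]
    if missing:
        # A missing required column is a genuine break, so surface it.
        raise ValueError(f"missing required columns: {missing}")
    # New columns are reported rather than treated as an error.
    extra = [col for col in header if col not in expected]
    # Values stay as strings, so IDs that turn alphanumeric still load.
    rows = [{col: row[col] for col in expected} for row in reader]
    return rows, extra

rows, extra = load_rows("customer_id,amount,region\nA1,10,EU\n7,25,US\n")
print(rows)   # rows restricted to the expected columns
print(extra)  # columns that appeared since the contract was written
```

The design choice here is to fail only on missing required columns and to report additions, so that a widened source file does not stop processing.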

Collaborative Multi-Agent Architecture

Modern agentic systems employ multiple specialized agents that coordinate across ingestion, validation, metadata management, and delivery functions. This collaborative mesh architecture replaces linear pipeline dependencies with intelligent orchestration where agents communicate, share context, and make coordinated decisions.

Each agent focuses on specific domains—one might handle schema evolution while another manages data quality validation. This specialization enables faster problem resolution and more accurate responses compared to monolithic systems attempting to handle all scenarios.
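A very reduced sketch of this division of labor is shown below. It assumes agents are plain functions that read and annotate a shared context object; the names `SharedContext`, `schema_agent`, `quality_agent`, and `run_agents` are hypothetical, and real multi-agent frameworks coordinate in far richer ways than a sequential loop.

```python
from dataclasses import dataclass, field

@dataclass
class SharedContext:
    """State that every agent can read and annotate."""
    rows: list
    notes: list = field(default_factory=list)
    quarantined: list = field(default_factory=list)

def schema_agent(ctx):
    # Records which fields appear, so later agents can rely on that note.
    fields = sorted({key for row in ctx.rows for key in row})
    ctx.notes.append(f"schema: {fields}")

def quality_agent(ctx):
    # Moves rows with a missing amount out of the main flow.
    bad = [row for row in ctx.rows if row.get("amount") is None]
    ctx.quarantined.extend(bad)
    ctx.rows = [row for row in ctx.rows if row.get("amount") is not None]
    ctx.notes.append(f"quality: quarantined {len(bad)} row(s)")

def run_agents(ctx, agents):
    """Run each specialized agent in turn against the shared context."""
    for agent in agents:
        agent(ctx)
    return ctx

ctx = run_agents(
    SharedContext(rows=[{"id": 1, "amount": 10}, {"id": 2, "amount": None}]),
    [schema_agent, quality_agent],
)
print(ctx.notes)
```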

Outcome-Focused Intelligence

Agentic AI shifts the paradigm from “moving data” to “accelerating decisions.” These systems function as virtual pair programmers for data teams, providing decision intelligence for pipeline design, suggesting schema optimizations, auto-tracking lineage, and troubleshooting failures. The result is higher adaptability and responsiveness compared to static rule-based systems.

The Critical Role of Data Observability

Foundation for Intelligent Data Operations

Data observability is the cornerstone that enables agentic AI systems to operate effectively. While traditional monitoring focuses on external metrics like system uptime, agentic data observability provides comprehensive visibility into data health, pipeline performance, and business impact across the entire data ecosystem.

Enterprise data observability addresses five key pillars that are essential for agentic AI systems:

| Observability Pillar | Agentic AI Enhancement |
|---|---|
| Pipeline Performance Monitoring | Autonomous agents track throughput, latency, resource utilization, and system health with intelligent optimization |
| Data Quality Monitoring | AI agents continuously validate accuracy, completeness, consistency, and timeliness with real-time anomaly detection |
| Data Lineage & Traceability | Agents automatically map data flows, perform impact analysis, and enable root cause analysis across complex pipelines |
| Collaboration & Incident Management | Automated alerts, cross-team coordination, and post-incident analysis for continuous improvement |
| Anomaly Detection & Predictive Analytics | Context-aware detection using ML models that understand business operations and predict issues before they occur |
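To make the lineage pillar concrete, impact analysis can be thought of as a graph traversal: given a dataset that changed, find everything downstream of it. The sketch below assumes lineage is already available as a simple mapping; the dataset names and the `downstream` helper are hypothetical.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built from it.
LINEAGE = {
    "raw_orders": ["clean_orders"],
    "clean_orders": ["revenue_daily", "churn_features"],
    "revenue_daily": ["exec_dashboard"],
}

def downstream(graph, start):
    """Return every dataset reachable from `start` (breadth-first)."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)

# Which assets are affected if clean_orders is wrong?
print(downstream(LINEAGE, "clean_orders"))
```

Running the same traversal over the reversed graph would give the upstream candidates for root cause analysis.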

Multi-Layered Observability Architecture

Modern agentic systems require multi-layered observability that extends beyond traditional monitoring approaches. This comprehensive framework includes:

• Data Quality Layer: AI agents monitor data content for accuracy, completeness, and freshness, detecting anomalies and schema changes in real-time.

• Pipeline Layer: Agentic systems observe ETL/ELT processes, tracking data flows and job status while enabling predictive maintenance that prevents failures before they occur.

• Infrastructure Layer: Autonomous monitoring agents analyze platform performance across cloud data warehouses, databases, and compute clusters, ensuring optimal resource utilization.

• Usage Layer: AI agents track query performance, user behavior, and costs, providing insights that optimize both technical performance and business value.

• Analytics/BI Layer: Intelligent monitoring validates last-mile outputs, ensuring dashboards and reports maintain data integrity throughout the delivery process.
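As an illustration of what the data quality layer checks, the following sketch computes two of the signals named above, completeness and freshness. The function names, the sample rows, and the two-hour freshness threshold are assumptions made for the example, not part of any specific platform.

```python
from datetime import datetime, timedelta, timezone

def completeness(rows, column):
    """Share of rows with a non-empty value in `column` (0.0 to 1.0)."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) not in (None, ""))
    return filled / len(rows)

def is_fresh(last_loaded, now, max_age):
    """True when the most recent load is within the allowed age."""
    return now - last_loaded <= max_age

rows = [{"email": "a@x.io"}, {"email": ""}, {"email": None}, {"email": "b@x.io"}]
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = datetime(2024, 1, 1, 10, 30, tzinfo=timezone.utc)

print(completeness(rows, "email"))
print(is_fresh(last_loaded, now, max_age=timedelta(hours=2)))
```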

Key Use Cases CIOs and CTOs Care About

Intelligent Real-Time Governance

Agentic AI transforms data governance from reactive compliance to proactive intelligence. AI-driven monitoring agents provide continuous data quality and compliance oversight, automatically flagging potential issues before they impact business operations. These systems learn from feedback to improve validation accuracy over time while maintaining auditable metadata across all pipeline stages.

Autonomous data quality agents like those deployed by leading enterprises can detect data quality issues with up to 95% accuracy while providing 24/7 surveillance without human intervention. One leading alcohol beverage manufacturer deployed agentic AI to automate data quality checks and lineage tracking: specialized agents validated file schemas, flagged anomalies, and generated actionable recommendations, significantly reducing manual intervention.

Frictionless Migration and Self-Healing Operations

Google Cloud’s Spanner Migration Agent exemplifies production-grade agentic systems that reduce manual code refactoring and minimize downtime during complex database migrations. These agents handle schema conversion, connectivity configuration, and incremental data synchronization while maintaining data integrity throughout the migration process.

Predictive maintenance agents analyze pipeline metrics to forecast potential failures, enabling proactive interventions that prevent costly disruptions. Machine learning models trained on historical performance patterns can distinguish between expected variations and genuine anomalies, significantly reducing false positives while improving response times.
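One simple way to separate expected variation from genuine anomalies is a z-score against recent history. The sketch below uses that technique as a stand-in for the trained models described above; the `is_anomaly` helper, the sample runtimes, and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev

def is_anomaly(history, value, threshold=3.0):
    """Flag `value` when it sits more than `threshold` std devs from the mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical job runtimes in seconds for recent successful runs.
runtimes = [100, 102, 98, 101, 99]
print(is_anomaly(runtimes, 103))  # small deviation
print(is_anomaly(runtimes, 140))  # large deviation
```

A fixed threshold like this ignores seasonality and trend, which is one reason production systems tend to use learned baselines instead.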

Self-Service Capabilities and Intelligent Experimentation

Agentic systems enable product and analytics teams to request data without bottlenecking engineering resources. Natural language interfaces allow business users to describe requirements conversationally, with AI systems automatically translating these requests into appropriate technical implementations.

AI agents can rapidly spin up and adapt to new data models for product testing, dramatically reducing the time from concept to insight delivery. This self-service capability extends to intelligent resource management where agents automatically adjust computational resources based on current data volume and processing requirements.
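The resource-adjustment idea can be reduced to a small sizing rule: scale workers with pending volume, within fixed bounds. This is a hedged sketch only; `workers_needed`, the per-worker capacity, and the limits are hypothetical values, and an actual agent would also weigh cost, deadlines, and cluster state.

```python
import math

def workers_needed(rows_pending, rows_per_worker=1_000_000,
                   min_workers=1, max_workers=16):
    """Pick a worker count proportional to backlog, clamped to safe bounds."""
    wanted = math.ceil(rows_pending / rows_per_worker)
    return max(min_workers, min(max_workers, wanted))

for backlog in (0, 2_500_000, 50_000_000):
    print(backlog, workers_needed(backlog))
```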

Autonomous Anomaly Detection and Resolution

Advanced agentic systems provide context-aware detection that understands business operations to fine-tune detection algorithms, ensuring only meaningful anomalies trigger alerts. These systems move from reactive to proactive monitoring by using predictive analytics to forecast potential data issues before they occur.

An AI-powered observability platform connected to intelligent sensors can detect unexpected changes before they escalate while providing automated root-cause analysis that accelerates resolution by identifying upstream issues in minutes.

Business Impact: Metrics That Matter

Operational Efficiency and Cost Reduction

Early adopters report up to 60% reduction in engineering hours spent on repetitive pipeline work, while organizations experience 30-35% faster delivery of analytics and business reporting. These improvements stem from intelligent automation that handles routine tasks, freeing engineers to focus on strategic initiatives.

Automation and strong data governance frameworks can reduce manual workload and cut data management costs by up to 45%, while organizations typically achieve 3X ROI through better forecasting, optimized operations, and enhanced customer targeting.

Measurable Reliability Improvements

AI-powered data observability platforms significantly reduce Mean Time to Detect (MTTD) and Mean Time to Resolve (MTTR). Organizations implementing comprehensive agentic observability report:

- Over 95% detection accuracy for critical data quality issues

- Proactive issue prevention that addresses problems before they impact business operations

- Automated incident resolution that reduces manual intervention by up to 50%
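For teams that want to track these two metrics themselves, the calculation is straightforward once incidents carry three timestamps. The sketch below assumes MTTD is measured from when an issue started to when it was detected, and MTTR from detection to resolution; definitions vary between organizations, and the incident records here are invented for illustration.

```python
from datetime import datetime
from statistics import mean

def _hours(delta):
    return delta.total_seconds() / 3600

def mttd_hours(incidents):
    """Mean time to detect: issue start until detection."""
    return mean(_hours(i["detected"] - i["started"]) for i in incidents)

def mttr_hours(incidents):
    """Mean time to resolve: detection until resolution (an assumed definition)."""
    return mean(_hours(i["resolved"] - i["detected"]) for i in incidents)

# Hypothetical incident log.
incidents = [
    {"started": datetime(2024, 1, 1, 0), "detected": datetime(2024, 1, 1, 4),
     "resolved": datetime(2024, 1, 1, 14)},
    {"started": datetime(2024, 1, 2, 0), "detected": datetime(2024, 1, 2, 2),
     "resolved": datetime(2024, 1, 2, 10)},
]
print(mttd_hours(incidents), mttr_hours(incidents))
```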

Risk Mitigation and Compliance

Continuous monitoring agents flag quality and compliance issues early, reducing audit exposure and regulatory risks. These systems maintain comprehensive audit trails and ensure explainability requirements are met, critical for regulated industries requiring strict compliance frameworks.

Data-related outages cost organizations millions each year, making proactive observability a critical investment for enterprise risk management.
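One possible way to make an audit trail tamper-evident is to chain entries by hash, so that altering any past record invalidates everything after it. The sketch below shows that technique in isolation; it is not drawn from any particular observability product, and the helper names and event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def _digest(event, prev_hash):
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, event):
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _digest(event, prev_hash)})

def verify(log):
    """Recompute the chain and report whether every entry is intact."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"agent": "quality", "action": "quarantine", "rows": 3})
append_entry(log, {"agent": "schema", "action": "add_column", "name": "region"})
print(verify(log))
```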

Practical Adoption Roadmap

Phase 1: Foundation with Intelligent Monitoring

Begin by implementing observability-first infrastructure using tools like OpenLineage and Great Expectations enhanced with AI-powered agents. These systems provide the foundational visibility required for agentic systems to understand normal operational patterns and detect deviations.

Deploy autonomous monitoring agents that can analyze vast data streams, detect anomalies, and make informed decisions in real time. Start with high-impact pipelines that experience frequent failures or require heavy manual oversight.

Phase 2: Collaborative Agent Deployment

Introduce specialized agents for schema changes and intelligent scheduling, gradually expanding capabilities as confidence in system performance grows. Connect agents across ingestion, metadata management, and delivery functions to create comprehensive autonomous workflows.

Establish governance frameworks with explainability and audit trails to maintain trust while enabling increasing levels of autonomous decision-making. This phase focuses on building multi-agent systems where specialized agents coordinate and share context.

Phase 3: Enterprise-Scale Autonomous Operations

Scale across functions to extend agentic capabilities from IT to product development, finance, HR, and other business domains. This expansion leverages the collaborative nature of multi-agent systems to address cross-functional data requirements.

Implement predictive and prescriptive analytics where agentic systems not only detect issues but automatically resolve them through self-healing pipelines and intelligent resource optimization.
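A small part of the self-healing behavior, waiting for a delayed source instead of failing, can be sketched as follows. The `run_when_ready` helper and its parameters are hypothetical; the readiness check and the job are passed in as callables so the example stays independent of any scheduler.

```python
import time

def run_when_ready(source_ready, run_job, max_checks=5,
                   wait_seconds=0.0, sleep=time.sleep):
    """Run the job once the source is ready; pause instead of failing otherwise."""
    for attempt in range(1, max_checks + 1):
        if source_ready():
            return {"status": "ran", "checks": attempt, "result": run_job()}
        sleep(wait_seconds)
    # The source never became ready: report a pause rather than raising.
    return {"status": "paused", "checks": max_checks, "result": None}

# Hypothetical source that becomes available on the third check.
states = iter([False, False, True])
outcome = run_when_ready(lambda: next(states), lambda: "loaded 120 rows")
print(outcome)
```

Returning a `paused` status leaves the decision about escalation or rerouting to whatever orchestrates the pipeline.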

VBeyond Digital: Your Data Engineering Partner

VBeyond Digital brings proven expertise in Microsoft-centric digital transformation with deep proficiency in automation, analytics, and observability technologies. Their approach focuses on outcome-first engagement with clarity on metrics like time-to-insight, engineering hours saved, and compliance readiness.

Rather than taking a rip-and-replace approach, VBeyond Digital specializes in data engineering that integrates agentic AI and observability frameworks within existing data estates, so adoption builds on current infrastructure investments. Their measured-risk approach, based on pilot programs, lets organizations scale on demonstrated business proof rather than theoretical benefits.

With accredited processes and deep Microsoft proficiency, VBeyond Digital ensures implementations are both technologically sound and strategically aligned with organizational vision. This foundation supports the transition from concept to production-grade agentic systems that deliver measurable business outcomes.

Conclusion

The convergence of agentic AI and data observability represents the next evolution in data engineering. Gartner predicts that 33% of enterprise software applications will include agentic AI by 2028.

Organizations that combine these capabilities early are establishing intelligent, self-managing data ecosystems that operate with unprecedented efficiency, reliability, and scalability. The result is a proactive data reliability system that finds and fixes problems before they impact business decisions, dashboards, or AI models.

For CIOs, CTOs, and product leaders, the opportunity is clear: reduce operational drag, accelerate insight delivery, and build adaptive data foundations that scale with business growth. The question is no longer whether to invest in agentic AI and data observability, but where to begin applying these technologies for measurable business outcomes.

The enterprises that embrace this convergence today will define the competitive landscape of tomorrow—operating with intelligent, self-healing data systems while their competitors struggle with increasingly complex manual processes and reactive monitoring approaches.

FAQs (Frequently Asked Questions)

Agentic AI refers to intelligent, autonomous systems that manage data operations with minimal human input. In data engineering, it adapts to schema changes, validates data quality, and coordinates multiple tasks, reducing manual intervention and keeping pipelines running smoothly.

Yes. By automating repetitive fixes, handling schema changes, and self-correcting errors, Agentic AI can cut maintenance hours by up to 60%. This lowers the cost of data engineering while improving reliability and uptime.

Several AI-powered observability platforms offer mobile dashboards and alerts. These tools let teams track pipeline health, data quality, and anomalies in real time from their phones, making it easier to respond quickly to incidents.

Agentic AI data engineering focuses on autonomous, adaptive systems that continuously learn and adjust. Unlike rigid pipelines that break when data formats change, agentic systems detect issues, adjust logic automatically, and keep workflows running without requiring constant reconfiguration.

Self-healing pipelines detect errors early and automatically adjust transformations, reroute data, or pause execution until sources are ready. This prevents failures from cascading downstream and significantly reduces the need for manual fixes.