The AI Accountability Scorecard: A Power BI dashboard that ties Copilot usage to KPIs (revenue, cycle time, CSAT)


Table of Contents

- Why “Copilot usage” alone does not prove business impact

- Scorecard definition and measurement principles

- Data sources: Copilot signals you can measure now

- Data model: How to join Copilot signals to revenue, cycle time, and CSAT

- Platform architecture: Power BI and Fabric patterns for scale and control

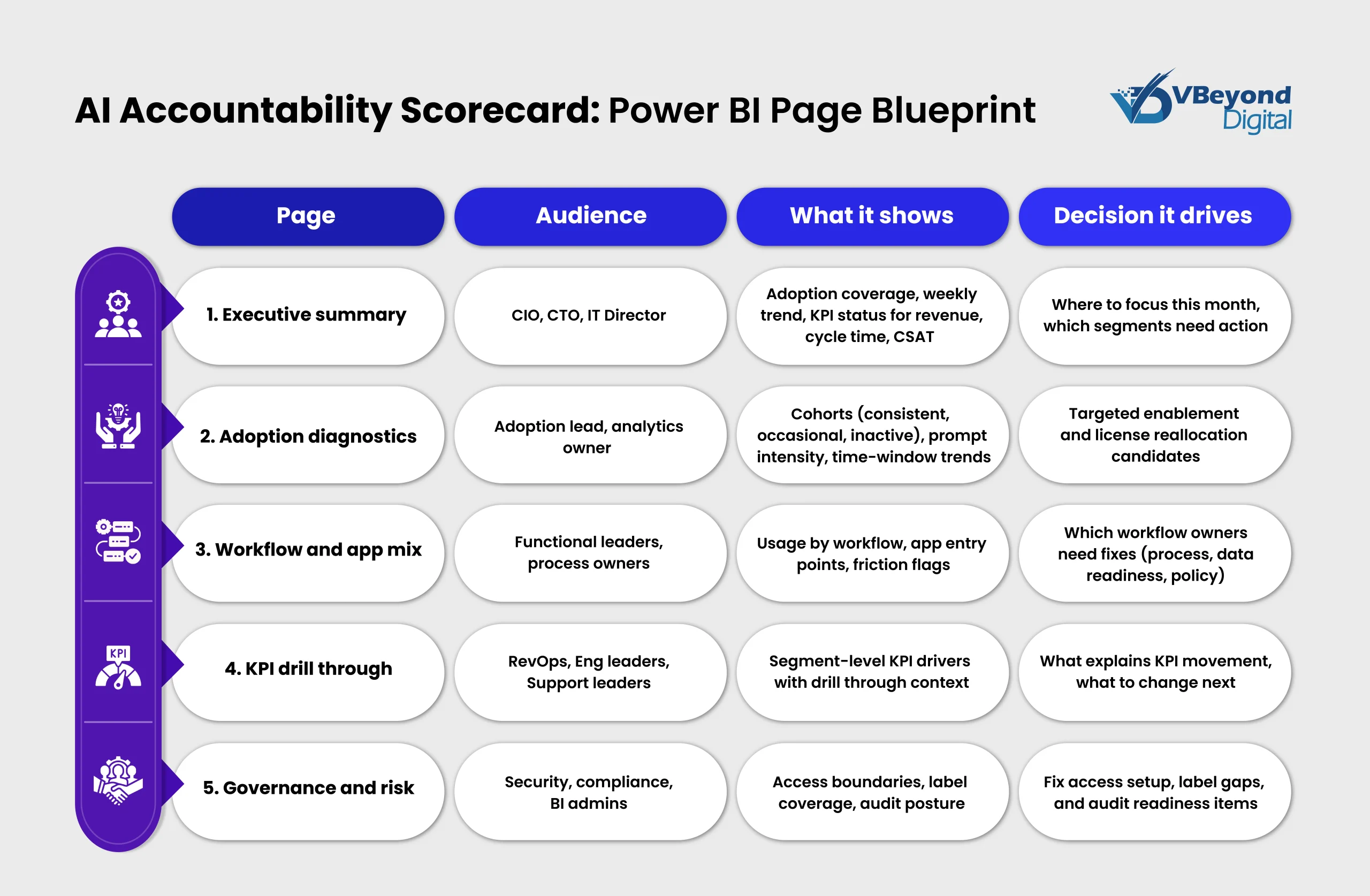

- Scorecard pages in Power BI: What executives see and what operators use

- Conclusion: From Copilot adoption signals to measurable outcomes

- FAQs (Frequently Asked Questions)

- A Power BI scorecard that ties Microsoft 365 Copilot adoption to revenue throughput, delivery cycle time, and CSAT (Customer Satisfaction Score).

- Pulls signals from the Microsoft 365 Copilot usage report and Copilot Chat usage report, with awareness of reporting latency.

- Models usage and KPIs at a weekly level, then compares cohorts and matched groups to separate signal from noise.

- Adds controls for access and information protection using Power BI row-level security and Purview sensitivity labels.

Most Copilot rollouts start with the right intent and end with the wrong question. Leaders ask, “Are people using it?” when the decision they actually need to make is, “What measurable business result is our Copilot spend driving, and where should we act next?”

The AI Accountability Scorecard is a Power BI view that connects Microsoft 365 Copilot adoption signals to outcomes you already manage: revenue throughput, cycle time, and Customer Satisfaction Score (CSAT). It is built to support decisions, not curiosity. In practice, it gives CIOs, CTOs, IT Directors, Product Heads, and Transformation Leaders a single place to answer four recurring leadership needs:

- Where Copilot is becoming a habit: Adoption that stays stable week after week, segmented by role, function, and region.

- Where adoption is stalling: Teams with licenses but low active usage, or teams with short bursts of activity that fade.

- Where business KPIs are moving: Revenue cycle, delivery speed, and customer outcomes analyzed alongside adoption patterns.

- What to do next: Specific actions tied to root causes, such as training gaps, workflow friction, data quality problems, or access constraints.

This matters because work patterns in Microsoft 365 are already under strain. Microsoft reports that employees are interrupted every two minutes during core work hours by meetings, email, or chat, calculated from “pings” data for the top 20% of users by ping volume received. If Copilot is expected to help teams regain capacity, the proof has to show up in the KPIs that reflect throughput and service quality, not just in counts of prompts.

Why “Copilot usage” alone does not prove business impact

Copilot usage metrics are necessary, but they are not a business case on their own. They tell you that people are interacting with Copilot, not whether those interactions are shifting revenue throughput, delivery cycle time, or customer experience. If leadership decisions are tied to usage alone, the program tends to swing between overconfidence and unnecessary pullbacks.

Here is the core problem: Copilot reporting surfaces adoption, retention, and engagement signals, but those signals are upstream of business outcomes. Microsoft describes the Microsoft 365 Copilot usage report as offering insights into adoption, retention, and engagement, noting that it is being continually enhanced. This is the right starting point for a scorecard, but it is not the finish line.

Why usage can mislead leaders

1) Activity counts do not equal workflow change

Prompts submitted and active users can rise even when teams keep the same operating model. In that pattern, Copilot becomes an extra step, not a measurable shift in how work moves from request to completion. You will see activity growth without durable cycle time movement.

2) Reporting latency can create false signals in daily views

Microsoft states that Copilot activity for a given day typically becomes available in the report within 72 hours of the end of that day in Coordinated Universal Time (UTC). Separately, Microsoft documents that Copilot reporting data can carry up to 72 hours of latency once it is available.

What this means in practice:

- A “drop” you see in the last two or three days may be a reporting delay, not a real adoption decline.

- Daily operational decisions based on near real-time usage can be noisy. Weekly rollups reduce this noise and align better with KPI cadence.
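The latency rule above can be turned into a simple cutoff. This is a minimal sketch, assuming the 72-hour figure is used as a fixed threshold (Microsoft describes it as typical, not guaranteed); the function name `is_day_complete` and the sample dates are illustrative only.

```python
from datetime import date, datetime, time, timedelta, timezone

# Assumption: 72 hours is the "typical" delay quoted above, used here as a cutoff.
REPORTING_LATENCY = timedelta(hours=72)


def is_day_complete(activity_day: date, now_utc: datetime) -> bool:
    """Return True once the latency window after the end of the UTC day has passed."""
    end_of_day = datetime.combine(
        activity_day + timedelta(days=1), time.min, tzinfo=timezone.utc
    )
    return now_utc >= end_of_day + REPORTING_LATENCY


# Hypothetical "current time" and the five most recent activity days.
now = datetime(2025, 3, 10, 9, 0, tzinfo=timezone.utc)
recent_days = [date(2025, 3, 5) + timedelta(days=i) for i in range(5)]
status = {d.isoformat(): is_day_complete(d, now) for d in recent_days}

for day, complete in status.items():
    print(day, "complete" if complete else "provisional")
```

Days flagged as provisional are candidates for greying out or excluding from trend lines, so that a reporting delay is not read as an adoption decline.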

3) Usage windows are time-bounded, so comparisons need a fixed method

Microsoft usage reports commonly support 7, 30, 90, and 180-day views. If one leader reports “last 30 days” and another reports “last 7 days,” the conversation becomes subjective. A scorecard needs a fixed reporting convention, for example, a weekly adoption trend plus a 30-day trailing view for consistency.

4) Correlation is not causation

High-performing teams often adopt earlier. If you only compare “high usage” teams to “low usage” teams, you may simply measure differences in leadership, skills, or process maturity. This is why impact measurement should start with cohorts and matched comparisons, then move toward stronger designs once your data is stable.


Scorecard definition and measurement principles

The AI Accountability Scorecard is a Power BI view that connects Microsoft 365 Copilot adoption signals to business KPIs, using consistent definitions and repeatable time windows so leadership can make decisions with confidence. It is built to answer outcome-led questions, such as where Copilot use is becoming consistent, which workflows show measurable movement, and what action to take next.

Microsoft positions the Microsoft 365 Copilot usage report as a summary of user adoption, retention, and engagement with Microsoft 365 Copilot and its enabled apps. It also states that Copilot activity for a given day is typically available within 72 hours of the end of that day (UTC). Those two details are central to the scorecard design because they define what the signals mean and how quickly you can act on them.

A. The metric contract: Precise definitions leaders can use

Your scorecard should adopt Microsoft’s metric meanings, then keep them stable over time:

- Enabled Users: Total unique users in your organization with Microsoft 365 Copilot licenses over the selected timeframe.

- Active Users: Enabled users who tried a user-initiated Microsoft 365 Copilot feature in one or more Microsoft 365 apps over the selected timeframe.

- Active users rate: Active users divided by enabled users.

- Total prompts submitted: Total prompts users sent to Microsoft 365 Copilot Chat during the selected timeframe.

- Average prompts submitted per user: Mean prompts each active user sent to Microsoft 365 Copilot Chat during the selected timeframe.

This contract stops common reporting drifts, such as teams mixing license assignment counts with activity counts, or reporting “usage” without stating the time window.
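One way to keep the contract stable is to encode it once and reuse it everywhere. The sketch below assumes a hypothetical `UsageSnapshot` record; the field names and sample numbers are illustrative and not part of any Microsoft schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageSnapshot:
    """One reporting window, with the time window stated explicitly."""
    window_days: int      # 7, 30, 90, or 180
    enabled_users: int    # unique users holding a Copilot license
    active_users: int     # enabled users who used a Copilot feature
    total_prompts: int    # prompts sent to Copilot Chat

    @property
    def active_users_rate(self) -> float:
        # Active users divided by enabled users (0.0 when nobody is enabled).
        return self.active_users / self.enabled_users if self.enabled_users else 0.0

    @property
    def avg_prompts_per_active_user(self) -> float:
        # Mean prompts per active user (0.0 when nobody is active).
        return self.total_prompts / self.active_users if self.active_users else 0.0


snap = UsageSnapshot(window_days=30, enabled_users=400, active_users=260, total_prompts=5200)
print(f"{snap.window_days}-day active users rate: {snap.active_users_rate:.0%}")
print(f"Average prompts per active user: {snap.avg_prompts_per_active_user:.1f}")
```

Carrying `window_days` on the record makes it harder to report a rate without stating the window it was measured over.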

B. Time windows and freshness rules: How to avoid misleading trends

Microsoft states the Copilot usage report can be viewed over the last 7, 30, 90, or 180 days. This supports a scorecard approach where:

- Weekly rollups drive operational reviews.

- A trailing 30-day view supports leadership reporting.

- 90 and 180-day views support license allocation and adoption durability analysis.

Because Microsoft also states activity is typically available within 72 hours (UTC), the scorecard should treat the most recent few days as incomplete. A practical rule is to run executive trend views at weekly grain and label the latest partial week clearly.
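As a rough illustration of the weekly-grain rule, the sketch below rolls daily prompt counts into Monday-start weeks and labels any week that extends past the last complete day. The function names, the Monday week start, and the sample data are assumptions; a fiscal calendar would need its own week boundaries.

```python
from collections import defaultdict
from datetime import date, timedelta


def week_start(d: date) -> date:
    """Monday of the week containing d."""
    return d - timedelta(days=d.weekday())


def weekly_rollup(daily_prompts: dict[date, int], last_complete_day: date) -> list[dict]:
    """Sum complete days per week and flag weeks that are still partial."""
    totals: dict[date, int] = defaultdict(int)
    for day, prompts in daily_prompts.items():
        if day <= last_complete_day:  # ignore days still inside the latency window
            totals[week_start(day)] += prompts
    rows = []
    for start in sorted(totals):
        week_end = start + timedelta(days=6)
        rows.append({
            "week_start": start,
            "prompts": totals[start],
            "is_partial": week_end > last_complete_day,
        })
    return rows


# Hypothetical data: 10 prompts per day from Mon 3 Mar to Thu 13 Mar 2025.
daily = {date(2025, 3, 3) + timedelta(days=i): 10 for i in range(11)}
rows = weekly_rollup(daily, last_complete_day=date(2025, 3, 12))
for row in rows:
    label = " (partial week)" if row["is_partial"] else ""
    print(row["week_start"], row["prompts"], label)
```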

C. Segmentation is not optional

Enterprise value shows up in specific roles and workflows, not in an org-wide average. The scorecard should segment by:

- Function and role family (sales, support, engineering, finance)

- Region and time zone (to explain collaboration load and meeting intensity)

- Manager chain and business unit (to tie adoption to accountable owners)

- Workflow ownership (so process fixes have a clear home)

This is where many programs stall. Without HR and org attributes, you can see “active users”, but you cannot identify where to focus on enablement or process changes.

D. Causality discipline: How to connect adoption signals to KPI movement

The scorecard should treat Copilot usage as a leading indicator, then test relationships to KPIs using a staged approach:

- Start with cohort trend analysis (consistent users vs occasional users vs non-users).

- Add matched comparisons (teams with similar workload and role mix, different adoption levels).

- Use before-and-after windows aligned to adoption ramp dates, with controls for seasonality and staffing shifts.

- Move to stronger causal designs, such as difference-in-differences, only once your data model is stable.

The goal is not to “prove” AI impact from a single chart. The goal is to give leaders a repeatable method to decide where to invest, where to intervene, and where the operating model requires change.
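For the last stage, the arithmetic of a difference-in-differences estimate is short enough to show directly. This is a sketch with made-up weekly cycle-time values (in days) for a high-adoption team and a matched comparison team; it assumes both groups would have followed parallel trends without Copilot, which is the key assumption to check before trusting the number.

```python
from statistics import mean


def diff_in_diff(treated_before, treated_after, control_before, control_after) -> float:
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Hypothetical weekly cycle times in days, before and after the adoption ramp date.
estimate = diff_in_diff(
    treated_before=[12.0, 12.4, 11.6],
    treated_after=[9.8, 9.4, 9.3],
    control_before=[11.9, 11.7, 11.8],
    control_after=[11.1, 10.9, 11.0],
)
print(f"Estimated effect on cycle time: {estimate:+.1f} days")
```

A negative value here means cycle time fell more in the adopting team than in the comparison team over the same period. It remains an estimate, not proof, and is only as good as the match between the two groups.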

Data sources: Copilot signals you can measure now

A scorecard is only as credible as the telemetry behind it. If your goal is to connect Copilot adoption to revenue throughput, delivery cycle time, and CSAT, you need data sources that are stable, permissioned, and refresh on a cadence your leadership can run.

Microsoft gives enterprises four practical signal layers you can join into a Power BI model:

- Admin center usage reporting for adoption signals

- Copilot Chat usage reporting for unlicensed activity

- Microsoft Graph usage report APIs for automated ingestion

- Microsoft Purview audit logs for governance and investigation

Below is what each source provides and where it fits in the scorecard.

1. Microsoft 365 admin center: Microsoft 365 Copilot Chat usage report

What this enables your business to do – It helps measure Copilot Chat adoption outside the paid Copilot license population, which is critical when business stakeholders ask why “Copilot usage” looks low despite broad interest.

What Microsoft states about the scope – Microsoft documents that the Copilot Chat usage report is currently limited to users without a Microsoft 365 Copilot license who interact with Copilot Chat across several entry points, including Teams, Outlook, Microsoft Edge, Word, Excel, PowerPoint, and OneNote.

How to access the report – Reports > Usage > Microsoft 365 Copilot > Copilot Chat

Metrics and details available – The report includes:

- Active users, average daily active users, total prompts submitted, and average prompts submitted per user.

- Trends for 7, 30, 90, and 180-day periods.

- User-level table fields such as prompts submitted, active days, and last activity date (UTC).

- User-level data that is anonymized by default, with export available as a CSV.

Where this data fits in the accountability model

- It helps separate two cases that often get mixed up:

  - Low licensed Copilot activity

  - High unlicensed Copilot Chat activity

- This distinction matters for licensing decisions and for targeting enablement by role.

2. Microsoft Graph: Copilot usage report APIs for automated ingestion

What this enables your business to do – It helps move from manual exports to a scheduled ingestion path into your analytics store, so your Power BI model refreshes with fewer human steps.

The key constraint Microsoft documents – Microsoft states that this API only returns usage data for users who have a Microsoft 365 Copilot license. Unlicensed Copilot Chat usage data is not available through Microsoft Graph reports APIs.

API maturity and change risk – Microsoft states that APIs under the /beta version are subject to change and use in production applications is not supported.

This does not block analytics programs, but it does change how you design the pipeline. Treat the ingestion path as a managed dependency with monitoring and fallbacks.

Technical details that matter for a scorecard

- The Graph user detail endpoint supports reporting periods such as D7, D30, D90, D180, plus ALL for combined output.

- Microsoft notes a direction to use Copilot usage APIs under the /copilot URL path segment going forward.

- Permissions are based on Reports. Microsoft lists administrator roles that are required for delegated access to read usage reports.

Where this data fits in the accountability model

- Graph ingestion supports repeatability and auditability for scorecard refresh.

- It also supports joining user-level signals to your HR dimensions and KPI fact tables at a weekly grain.
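A small guard around the reporting period keeps a scheduled ingestion job from requesting a window the API does not support. The sketch below only builds a request URL and makes no network call. The function name `getMicrosoft365CopilotUsageUserDetail`, the `/beta` base URL, and the `path_prefix` parameter are assumptions to verify against the current Microsoft Graph documentation, especially given the noted move toward the /copilot path segment.

```python
# Assumptions: /beta base URL and function name; verify both against Microsoft Graph docs.
GRAPH_BETA = "https://graph.microsoft.com/beta"
USER_DETAIL_FUNCTION = "getMicrosoft365CopilotUsageUserDetail"
SUPPORTED_PERIODS = ("D7", "D30", "D90", "D180", "ALL")


def user_detail_url(period: str, path_prefix: str = "/reports") -> str:
    """Build the user detail request URL for a supported reporting period."""
    if period not in SUPPORTED_PERIODS:
        raise ValueError(
            f"Unsupported period {period!r}; expected one of {SUPPORTED_PERIODS}"
        )
    return f"{GRAPH_BETA}{path_prefix}/{USER_DETAIL_FUNCTION}(period='{period}')"


url = user_detail_url("D30")
print(url)
```

Keeping the path prefix as a parameter means a later change of URL segment is a configuration change in the pipeline, not a code rewrite.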

3. Microsoft Purview: Audit logs for Copilot and AI applications

What this enables your business to do – It helps add governance and investigation capability alongside adoption measurement. This is important for security teams and for executive confidence when Copilot adoption scales.

What Microsoft documents – Microsoft states that audit logs are generated for user interactions and admin activities related to Microsoft Copilot and AI applications, and that the system automatically logs these activities as part of Audit (Standard). If your organization enables auditing, you do not need extra steps to configure auditing support for Copilot and AI applications.

Microsoft also states that audit records include details about who interacted with Copilot, when and where the interaction occurred, and references to files, sites, or other resources accessed to generate responses.

Why this matters for the scorecard

- Usage metrics tell you “how much.” Audit data helps answer “what happened” in a specific scenario.

- For regulated teams, audit data supports investigations and policy reviews without turning your KPI dashboard into a security console.

Note on non-Microsoft AI apps – Microsoft documents pay-as-you-go billing for audit logs for non-Microsoft AI applications and states those logs are retained for 180 days. This is relevant if your organization tracks Copilot alongside other AI tools in a shared governance model.

Data model: How to join Copilot signals to revenue, cycle time, and CSAT

A good accountability scorecard depends on a data model that business leaders can trust and data teams can maintain. The goal is simple: connect Copilot adoption signals to outcomes without creating brittle joins, unclear definitions, or confusing grain mismatches.

Start with a star schema that is built for slicing and KPI joins

Microsoft’s Power BI guidance recommends a star schema approach where dimension tables describe business entities and fact tables store measurements. This structure supports filtering and grouping cleanly and keeps models understandable at scale.

For this scorecard, plan for multiple fact tables that share the same conformed dimensions, instead of forcing every KPI into a single wide table.

Core dimensions (conformed)

- DimTime: Weekly and monthly keys, fiscal periods, calendar attributes

- DimUser: User identity keys plus role family, location, manager chain

- DimOrg: Business unit, cost center, product line, region, team

- DimWorkflow: Your internal workflow taxonomy (for example: sales qualification, incident triage, release notes)

Core fact tables

- FactCopilotUsageWeekly: Adoption and interaction measures at weekly grain

- FactRevenueWeekly: Pipeline movement, bookings, revenue recognized at weekly grain

- FactCycleTimeWeekly: Delivery metrics (lead time, PR cycle time, release cadence) at weekly grain

- FactCSATWeekly: CSAT, first response time, time to resolution, reopen rate at weekly grain

Ingest Copilot usage with stable keys and clear time semantics

Microsoft notes that the Microsoft 365 Copilot usage report can be exported to a CSV from the report page and includes a user table showing each Copilot-enabled user’s last activity date across Copilot apps.

For Copilot Chat, Microsoft documents a detailed user activity table with “last activity date” fields by app and explicitly labels those dates as UTC. It also states that the last activity date remains fixed even if the report timeframe changes.

These details shape the model:

- Keep raw ingested timestamps in UTC as stored values.

- Build reporting week boundaries explicitly (for example, ISO week, or your fiscal week).

- Treat “last activity date” as a point-in-time attribute, not a weekly measure.

Treat org attributes as first-class data, not report decoration

Org attributes make the scorecard actionable. Microsoft’s Viva Insights documentation explains that missing or low-quality organizational data can result in incomplete insights and identifies which missing fields are causing data gaps.

Microsoft also documents that Viva Insights can receive organizational data through Microsoft Entra ID by default, or through an uploaded organizational data file.

The practical implication: if your Entra ID profile data does not contain the segmentation fields leaders need, you will need an HR-fed org dimension that is kept current and historically accurate.

Build DimOrg as effective-dated so KPI history remains correct

When teams reorganize, a scorecard that overwrites org attributes will misstate history. A Type 2 slowly changing dimension pattern preserves history by creating a new row version when attributes change.

Microsoft Fabric documentation describes implementing Slowly Changing Dimension Type 2 and calls out the need to detect new or changed records as part of the design.

For DimOrg, this means:

- Store ValidFrom and ValidTo (or equivalent) dates per org assignment.

- Join usage and KPI facts to the DimOrg row that was valid for that week.
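The effective-dated join can be sketched as a lookup that returns the org row valid for a given week. The `OrgAssignment` record, the half-open interval convention (`valid_from` inclusive, `valid_to` exclusive, `None` for the current row), and the sample data are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class OrgAssignment:
    user_key: str
    business_unit: str
    valid_from: date            # inclusive
    valid_to: Optional[date]    # exclusive; None marks the current row


def org_as_of(rows: list[OrgAssignment], user_key: str, week_start: date) -> Optional[OrgAssignment]:
    """Return the org row that was valid for the user at the start of the week."""
    for row in rows:
        if row.user_key != user_key:
            continue
        if row.valid_from <= week_start and (row.valid_to is None or week_start < row.valid_to):
            return row
    return None


# Hypothetical history: the user moved from Sales to Support on 1 April 2025.
dim_org = [
    OrgAssignment("u1", "Sales", date(2024, 1, 1), date(2025, 4, 1)),
    OrgAssignment("u1", "Support", date(2025, 4, 1), None),
]

print(org_as_of(dim_org, "u1", date(2025, 3, 24)).business_unit)
print(org_as_of(dim_org, "u1", date(2025, 4, 7)).business_unit)
```

With this pattern, March usage stays attributed to Sales after the reorganization, so historical KPI comparisons do not shift when the org chart does.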

Handle many-to-many relationships with explicit bridges

Users often belong to multiple teams, products, or initiatives. Power BI provides specific modeling guidance for many-to-many scenarios, including patterns for designing these relationships successfully.

In practice:

- Use a BridgeUserOrg table if a user can belong to multiple org units in a period.

- Use a BridgeUserWorkflow table if workflows are owned by multiple teams.
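A bridge table avoids double counting only if each user's activity is split across org units in a controlled way. The sketch below assumes a hypothetical allocation weight per bridge row, with weights summing to 1.0 per user; the weights, names, and numbers are illustrative and are one possible convention, not a Power BI requirement.

```python
from collections import defaultdict

# Hypothetical BridgeUserOrg rows: (user_key, org_unit, allocation_weight).
bridge_user_org = [
    ("u1", "Sales", 1.0),
    ("u2", "Sales", 0.5),
    ("u2", "Support", 0.5),
]

# Hypothetical weekly prompt counts per user.
weekly_prompts = {"u1": 40, "u2": 20}


def prompts_by_org(bridge, prompts) -> dict[str, float]:
    """Allocate each user's prompts to org units using the bridge weights."""
    totals: dict[str, float] = defaultdict(float)
    for user_key, org_unit, weight in bridge:
        totals[org_unit] += prompts.get(user_key, 0) * weight
    return dict(totals)


allocated = prompts_by_org(bridge_user_org, weekly_prompts)
print(allocated)
print("Total preserved:", sum(allocated.values()) == sum(weekly_prompts.values()))
```

Because the weights for each user sum to 1.0, the org-level totals add back up to the user-level total, which is the property leaders expect when they compare a segment view to the headline number.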

Platform architecture: Power BI and Fabric patterns for scale and control

A Copilot accountability scorecard becomes a long-running management tool only when the platform design can support three needs at the same time: scale (many users and many data sources), refresh reliability (consistent updates without manual steps), and controlled access (leaders see what they need, and sensitive data stays protected).

Microsoft Fabric and Power BI can support this pattern when you treat the scorecard as a governed analytics product, not a one-off report.

1. Data layer: OneLake as the system of record for analytics tables

What this enables your business to do – It helps keep Copilot signals and KPI data in a single logical analytics store, so Power BI semantic models stay consistent across teams and refresh cycles.

Microsoft describes OneLake as a single, unified, logical data lake that comes automatically with every Microsoft Fabric tenant and is designed to be the single place for analytics data. Microsoft also states that each tenant gets one unified OneLake with a single file-system namespace.

Practical implications for the scorecard:

- You can land Copilot usage extracts or API ingested data into OneLake tables, alongside CRM, delivery, and support KPI tables.

- Teams can work from one set of curated tables, instead of copying data into separate workspaces or separate report-owned datasets.

A proven structuring method for scorecard data – Microsoft documents a medallion lakehouse approach in Fabric, using layered tables for staged, refined, and curated data. For the AI Accountability Scorecard, a practical mapping is:

- Bronze (raw landing)

  - Copilot usage exports, Copilot Chat exports, KPI exports from source systems

  - Minimal changes other than schema standardization and timestamp parsing

- Silver (conformed and validated)

  - Identity mapping (UPN to HR ID), effective-dated org attributes

  - Standard week keys and fiscal period keys

  - Data quality checks that flag missing org fields that would break segmentation

- Gold (scorecard-ready)

  - Weekly fact tables for Copilot usage and KPIs

  - Conformed dimensions (time, org, user, workflow) used by Power BI

This structure reduces report fragility because the Power BI layer reads stable tables, while ingestion and source changes are handled upstream.
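The Silver-layer steps of identity mapping and data quality checks can be sketched in a few lines. This is a plain-Python illustration, not Fabric code; the function `conform_users`, the required field list, and all sample records are hypothetical.

```python
REQUIRED_ORG_FIELDS = ("role_family", "business_unit", "region")


def conform_users(usage_rows, upn_to_hr_id, org_by_hr_id, required=REQUIRED_ORG_FIELDS):
    """Map UPNs to HR IDs and flag rows that would break segmentation."""
    conformed, issues = [], []
    for row in usage_rows:
        upn = row["upn"].strip().lower()  # normalize before matching
        hr_id = upn_to_hr_id.get(upn)
        if hr_id is None:
            issues.append((upn, "no HR ID mapping"))
            continue
        org = org_by_hr_id.get(hr_id, {})
        missing = [field for field in required if not org.get(field)]
        if missing:
            issues.append((upn, "missing org fields: " + ", ".join(missing)))
        conformed.append(
            {**row, "upn": upn, "hr_id": hr_id, **{f: org.get(f) for f in required}}
        )
    return conformed, issues


# Hypothetical Bronze rows and reference data.
usage_rows = [
    {"upn": "Ana@Contoso.com ", "prompts": 12},
    {"upn": "li@contoso.com", "prompts": 5},
    {"upn": "sam@contoso.com", "prompts": 3},
]
upn_to_hr_id = {"ana@contoso.com": "E001", "li@contoso.com": "E002"}
org_by_hr_id = {
    "E001": {"role_family": "Sales", "business_unit": "EMEA Sales", "region": "EMEA"},
    "E002": {"role_family": "Support", "business_unit": "", "region": "APAC"},
}

conformed, issues = conform_users(usage_rows, upn_to_hr_id, org_by_hr_id)
for upn, problem in issues:
    print(upn, "->", problem)
```

The issue list is worth publishing alongside the scorecard, since unmapped users and missing org fields silently shrink the segments leaders are comparing.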

2. Semantic layer: Direct Lake for high-volume scorecard models

What this enables your business to do – It helps run interactive Power BI dashboards over large Delta tables without importing full copies of data into every semantic model refresh cycle.

Microsoft explains that Direct Lake semantic models differ from Import mode in how refresh works. Import mode replicates the data and creates a cached copy for the semantic model. Direct Lake refresh copies only metadata, described by Microsoft as framing, which can complete in seconds.

Microsoft also describes the query flow in Direct Lake mode: when a Power BI report visual requests data, the semantic model accesses the OneLake Delta table to return results, and it can keep some recently accessed data in cache for efficiency.

Design choices that matter for this scorecard:

- Use Direct Lake for high-volume fact tables such as weekly Copilot usage by user and weekly KPI facts, especially when the audience expects fast filtering by org, region, and role.

- Keep the semantic model thin and predictable. Push heavy shaping and joins into the curated tables in OneLake, allowing the model to focus on measures, relationships, and security rules.

- Plan Delta table layout with query behavior in mind. Microsoft notes Direct Lake performance depends on well-tuned Delta tables for efficient column loading and query execution.

Where Import or DirectQuery still fits – Power BI supports multiple storage modes, and the right choice depends on latency and source constraints. Microsoft documents that table storage modes control whether Power BI stores data in memory or retrieves it from the source when visuals load. In many enterprises, the scorecard ends up as a composite:

- Direct Lake for curated OneLake tables.

- Import for small reference dimensions that rarely change.

- DirectQuery only when a source cannot be landed into OneLake within required governance boundaries.

3. Access control and governance: RLS, workspace roles, and sensitivity labels

What this enables your business to do – It gives executives and leaders the visibility they need while keeping data exposure aligned to responsibility, region, and policy.

Row-level security and workspace roles

Microsoft states that row-level security does not apply to workspace users who have edit permission for the semantic model. Specifically, the workspace roles of Admin, Member, and Contributor are not subject to RLS in that workspace context. If you want RLS to apply to people in a workspace, Microsoft advises assigning them the Viewer role.

For a scorecard used by leaders across business units, this typically leads to a clear pattern:

- Keep report builders and model owners as Admin, Member, or Contributor.

- Provide the leadership audience access through Viewer role, published app access, or explicit sharing, depending on your governance model. Microsoft notes the Viewer role can be used to enforce RLS for users who browse content in a workspace.
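An access review for this pattern can be reduced to one rule: anyone who is supposed to see filtered data must not hold an edit-capable workspace role. The sketch below encodes the role behavior described above; the assignment list and the `needs_rls` flag are hypothetical inputs that would come from your own access inventory.

```python
# Roles with edit permission on the semantic model are not subject to RLS.
RLS_EXEMPT_ROLES = {"Admin", "Member", "Contributor"}
KNOWN_ROLES = RLS_EXEMPT_ROLES | {"Viewer"}


def rls_enforced(workspace_role: str) -> bool:
    """True when RLS filters apply to a user holding this workspace role."""
    if workspace_role not in KNOWN_ROLES:
        raise ValueError(f"Unknown workspace role: {workspace_role!r}")
    return workspace_role not in RLS_EXEMPT_ROLES


def access_review(assignments):
    """Return users who should be filtered by RLS but hold an exempt role."""
    return [
        user
        for user, role, needs_rls in assignments
        if needs_rls and not rls_enforced(role)
    ]


# Hypothetical inventory: (user, workspace role, should this user be RLS-filtered?).
assignments = [
    ("model.owner@contoso.com", "Admin", False),
    ("emea.director@contoso.com", "Viewer", True),
    ("apac.director@contoso.com", "Contributor", True),
]

violations = access_review(assignments)
print("Review needed for:", violations)
```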

Information protection with sensitivity labels

Microsoft documents that Power BI supports sensitivity labels from Microsoft Purview Information Protection, and it describes how labels function in Power BI. Microsoft also documents how to enable sensitivity labels at the tenant level in the Fabric admin portal under tenant settings for information protection.

For an accountability scorecard, this matters because the model often contains:

- User-level adoption signals

- Org structure and role attributes

- Customer KPI data such as CSAT and support performance

A practical governance setup includes the following:

- Label the semantic model and report artifacts according to your data classification policy.

- Limit export and reshare paths based on label policy, in line with Purview configuration.

- Keep access review tied to workspace role assignments, since role choice affects whether RLS applies.

Scorecard pages in Power BI: What executives see and what operators use

A Copilot accountability scorecard works when each page answers a single decision question. Executives need a short, stable view of adoption and KPI movement. Operators need diagnostic depth so they can act without guessing. Power BI supports both patterns, including scorecards that track goals against key business objectives in one place.

Page 1: Executive summary (decision view)

Primary question: Where is Copilot adoption consistent, and where are the KPIs moving?

Design this page to load fast and read quickly:

- Adoption coverage: Enabled Users, Active Users, and Active users rate, shown with a 30-day trailing view for leadership rhythm. These are defined in Microsoft’s Copilot usage reporting.

- Consistency trend: Weekly active trend line, plus a “consistent users” cohort count (for example, active in 4 of the last 6 weeks).

- KPI scorecard tiles: Revenue throughput, delivery cycle time, CSAT, each with a clear target and a current value. Power BI scorecards let you track goals and connect them to report visuals, helping tie each KPI tile to its underlying evidence.

- Next actions panel: Three actions tied to the biggest constraints detected that month (training gap, workflow owner needed, data quality fix, access boundary issue).
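The “consistent users” cohort rule is easy to make explicit. The sketch below uses the example threshold from the list above (active in 4 of the last 6 weeks); the cohort labels and the per-user weekly flags are illustrative, and the thresholds should follow your own program definition.

```python
from collections import Counter


def classify_user(weekly_active: list[bool], window: int = 6, threshold: int = 4) -> str:
    """Classify a user from weekly activity flags ordered oldest to newest."""
    recent = weekly_active[-window:]
    active_weeks = sum(recent)
    if active_weeks >= threshold:
        return "consistent"
    if active_weeks > 0:
        return "occasional"
    return "non-user"


# Hypothetical weekly activity flags per user (oldest week first).
activity = {
    "u1": [True, False, True, True, False, True],
    "u2": [False, False, False, True, False, False],
    "u3": [False] * 6,
    "u4": [True, True, True, True, False, False, False, False, False, False],
}

cohorts = {user: classify_user(flags) for user, flags in activity.items()}
cohort_counts = Counter(cohorts.values())
print(dict(cohort_counts))
```

Note that `u4` was active early on but falls into the non-user cohort because only the last six weeks count, which is the behavior you want when tracking whether adoption is sticking.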

Page 2: Adoption and retention diagnostics (adoption view)

Primary question: Is adoption growing in the right roles, and is it sticking?

Include:

- Time-window selector aligned to Microsoft reporting windows (7, 30, 90, 180 days).

- Cohort view: New users, returning users, drop-offs, by role family and business unit.

- Prompt intensity: Prompts submitted and prompts per active user, segmented by function, and tracked weekly to reduce noise.

Page 3: Workflow and app mix (work view)

Primary question: Which workflows are actually changing?

Include:

- App mix chart aligned to what your selected reporting source provides (admin usage report for licensed Copilot, Copilot Chat usage report for unlicensed Copilot Chat activity).

- Workflow tagging: A controlled list of 10 to 20 workflows (sales follow-up, incident triage, PRD review, release notes) with owners and KPI links.

- “Friction flags”: Segments where adoption is high but KPI movement is flat, which is often a process or data constraint.
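A friction flag can be expressed as a simple rule over segment-level rows. In the sketch below, the thresholds (`min_active_rate`, `min_kpi_change`), the segment names, and the KPI change values are all hypothetical; the point is only to show the shape of the rule, high adoption combined with little KPI movement.

```python
def friction_flags(segments, min_active_rate: float = 0.6, min_kpi_change: float = 0.02):
    """Return segments where adoption is high but the KPI has barely moved."""
    return [
        seg["segment"]
        for seg in segments
        if seg["active_rate"] >= min_active_rate
        and abs(seg["kpi_change"]) < min_kpi_change
    ]


# Hypothetical segment rows: kpi_change is the relative change over the window.
segments = [
    {"segment": "Field Sales / EMEA", "active_rate": 0.78, "kpi_change": -0.09},
    {"segment": "Support / APAC", "active_rate": 0.71, "kpi_change": 0.005},
    {"segment": "Finance / NA", "active_rate": 0.22, "kpi_change": 0.0},
]

flagged = friction_flags(segments)
print("Friction flags:", flagged)
```

Low-adoption segments with flat KPIs are deliberately excluded, since they point to an enablement question and not to a process or data constraint.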

Page 4: KPI drill-downs with drillthrough (evidence view)

CSAT drillthrough: Queue, category, severity band, channel, customer tier.

The key design choice for this page is to make drill-downs carry context from the executive view into a filtered detail view, without asking leaders to rebuild filters manually. Power BI report page drillthrough is designed for exactly this. It lets report viewers move from one page to another page filtered to a specific value, commonly triggered by right-clicking a visual element or using a button.

How to structure drillthrough so it supports decision-making

- One drillthrough page per decision area

  Examples: Revenue detail, Cycle time detail, CSAT detail.

- Define drillthrough fields explicitly

  Add fields such as Business Unit, Region, Role Family, Team, Product Line, Queue, or Repo. These become the “context keys” that filter the drillthrough page when a leader drills from a summary visual. The Power BI drillthrough feature works by filtering the target page to the specific value selected on the source page.

- Design the user flow with a clear return path

  Microsoft’s drillthrough guidance recommends designing a flow where users view a report page, identify a visual element to analyze more deeply, right-click to drill through, do additional analysis, then return to the source page.

What to include on each KPI drillthrough page

- A “what changed” header with the exact segment context (for example: Region = EMEA, Role Family = Field Sales, Week Range = last 13 weeks).

- A trend panel that shows:

  - The KPI trend (weekly)

  - The Copilot cohort trend for the same segment (weekly)

  - A marker showing when adoption reached a stable threshold (your program definition)

- A diagnostics panel that answers “why might this KPI not be moving yet?” Examples:

  - Revenue: deal size mix, pipeline source shifts, approval delays

  - Cycle time: PR size distribution, build failures, blocked work

  - CSAT: severity mix, channel mix, staffing ratio changes

- A “next actions” block with role-based actions, tied to the constraint:

  - Enablement gaps

  - Workflow ownership gaps

  - Data quality gaps (identity mapping, org attributes, workflow tagging)

This page is where your scorecard earns trust. It shows the evidence behind the KPI tile and highlights the most likely constraint, without over-claiming causality.

Page 5: Governance and risk (control view)

Primary question: Are we controlling access, protecting sensitive data, and keeping investigation capability as adoption scales?

This page is not a security console. It is a leadership control view that shows whether the program is meeting policy requirements, and whether the scorecard itself is safe to share with the right audience.

A. Access boundaries with Row-level security and workspace roles

Row-level security (RLS) in the Power BI service only restricts data access for users with Viewer permissions. It does not apply to Admins, Members, or Contributors. That detail matters because many scorecards fail governance reviews simply due to workspace role choices.

A practical control summary on the page:

- Which audiences get access through Viewer role or app distribution.

- Which audiences have edit permissions and therefore are not subject to RLS in that workspace context.

B. Information protection with Microsoft Purview sensitivity labels

If your scorecard contains user-level adoption signals or customer outcome data, classification matters. Microsoft documents how to enable sensitivity labels in Power BI (Fabric admin portal tenant settings) as part of information protection.

Governance page indicators that leaders understand:

- Percentage of scorecard artifacts (semantic model, report, app) with sensitivity labels applied.

- Whether export and share settings align with label policy, based on your tenant configuration.

C. Audit readiness for Copilot and AI activities

Microsoft documents that audit logs are generated for user interactions and admin activities related to Microsoft Copilot and AI applications, and that these activities are automatically logged as part of Audit (Standard).

For leaders, the governance page should answer:

- Is auditing enabled for the tenant and the services in scope?

- Do security teams have the right roles to search the audit log when needed? Microsoft states you must be assigned Audit Logs or View-Only Audit Logs roles in the Purview portal to search the audit log.

- Which Copilot-related investigation scenarios are in scope (for example, verifying who accessed certain resources in Copilot interactions, within policy boundaries).

D. Program controls that reduce risk without slowing delivery

Include a short checklist, built from your internal policy:

- Identity mapping completeness (UPN to HR ID)

- Org attribute completeness (role family, business unit, region)

- KPI data source ownership (who signs off on revenue, cycle time, CSAT tables)

- Refresh cadence and data latency note (so leaders do not treat partial days as final)

Conclusion: From Copilot adoption signals to measurable outcomes

The AI Accountability Scorecard is designed to help leaders move from “Are people using Copilot?” to “What business result is changing, and what action should we take next?” That shift matters because Microsoft 365 Copilot usage data is best interpreted as a management signal, not a real-time operational feed. Microsoft states that Copilot activity for a given day is typically available within 72 hours of the end of that day (UTC).

If you want to move quickly without introducing reporting risk, start with these steps:

- Set a measurement contract: Definitions, populations, time windows, KPI owners.

- Build weekly Copilot usage facts and treat the most recent days as incomplete due to reporting latency.

- Join to revenue, cycle time, and CSAT at weekly grain, segmented by role and org attributes.

- Publish with governance that matches Power BI security behavior (RLS plus correct workspace roles) and apply sensitivity labels to reports and semantic models.

- Run a weekly adoption review and a monthly KPI review where the scorecard drives license allocation, enablement focus, and workflow fixes.

At VBeyond Digital, we bring clarity and velocity to your digital initiatives by building this scorecard as a decision system: reliable telemetry, a maintainable model, and an operating cadence that converts insight into accountable action.

FAQs (Frequently Asked Questions)

An AI Accountability Scorecard is a practical framework that links AI adoption to business outcomes. It tracks leading indicators (like enablement and usage quality), lagging indicators (like time saved or cycle-time reduction), and guardrails (like risk, privacy, and quality). The goal is to make AI value measurable, explainable, and repeatable—so you can see what’s working, what isn’t, and what to change.

The AI Accountability Scorecard measures Microsoft Copilot ROI by connecting Copilot activity to measurable productivity and performance metrics. It combines adoption signals (who’s using what, how often, in which workflows) with outcome metrics (hours saved, reduced rework, faster approvals, higher throughput, improved service levels). It then translates those outcomes into financial value while accounting for license costs, change-management investment, and confidence levels in the measurement.

In this context, the Microsoft 365 Copilot usage report focuses on Copilot features embedded across Microsoft 365 apps (like Word, Excel, PowerPoint, Outlook, and Teams) and how people use them. The Copilot Chat usage report focuses specifically on usage patterns in Copilot Chat (prompts, sessions, engagement), which can include broader knowledge work beyond app-embedded actions. Together, they show where Copilot is used and how people interact with it.

Copilot usage alone doesn’t prove business value because activity is not the same as impact. High usage might reflect curiosity, uneven prompting quality, or work that doesn’t materially change outcomes. Value requires evidence of improved performance—like faster delivery, fewer errors, higher customer resolution rates, or reduced operating costs—plus attribution logic showing Copilot contributed meaningfully versus other factors.

Yes—Power BI can track Copilot adoption and business KPIs together by combining Copilot usage data with operational and financial datasets. You can build a unified model that slices outcomes by team, role, process, and time, and compares adopters vs. non-adopters (or before vs. after). The key is good data governance and a clear measurement design so the dashboard supports decisions, not just reporting.